Generative AI - EoY

Earlier this year, my team released a Generative AI chatbot for business, designed to improve productivity by streamlining common tasks. I documented the design and development process through a series of blog posts (links below).

- OpenAI ChatGPT

- AI - Rise of the Machines

- Generative AI for Business

- Prompt Engineering

- Generative AI for Business - Update

- Generative AI - Embeddings

- Generative AI - Context

- Integrated Generative AI

- Generative AI - Enhancements

As we close the year, I thought I would take a moment to reflect on our progress.

As a reminder, the Generative AI chatbot for business was designed and built by two engineers (working part-time) and released to our business (7,000 viable users) in June 2023.

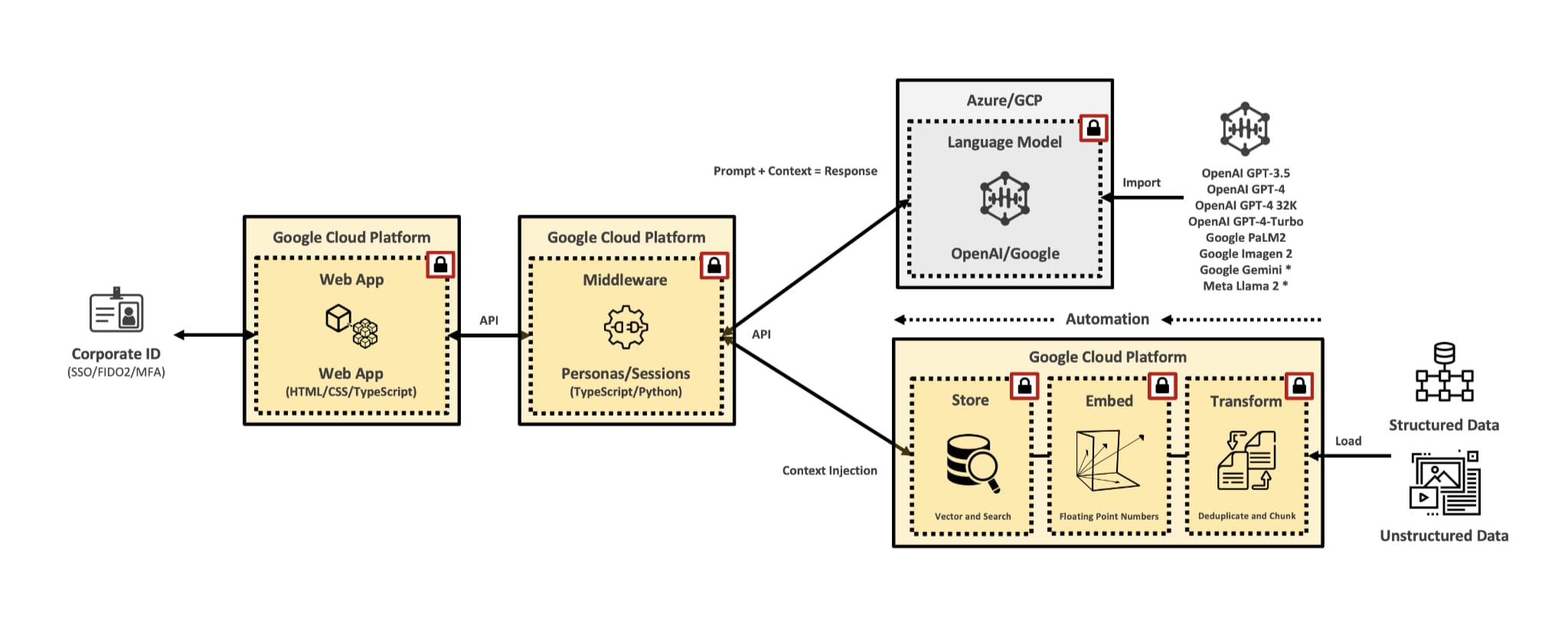

The high-level diagram below highlights the architecture, which is multi-cloud (Microsoft Azure and Google Cloud Platform) by design. It includes a responsive front-end and custom middleware, with each service being loosely coupled and ephemeral, maximising flexibility and cost efficiency.

The entire application is defined in Terraform, meaning it is cloud-agnostic and can be deployed “as-code”. The only exception is the language models, which are cloud-specific (e.g., Azure OpenAI Service, Google Vertex AI).

Regarding features, the list below outlines the current state.

- Azure AD Authentication (SSO/FIDO2/MFA Compatible)

- User Authorisation

- Secure/Hardened Codebase and Environments (Azure and GCP)

- Logging and Application Monitoring

- Cost Monitoring

- Responsive User Interface and Middleware API

- Ephemeral Services

- Infrastructure-as-Code (HashiCorp Terraform)

- Multiple Models (GPT-3.5, GPT-4, GPT-4 32k, GPT-4-Turbo, PaLM 2, Imagen 2)

- Maximum 128K Token Limit

- Multimodal (Text and Images)

- User Context Caching

- Obfuscated User Usage Data

- Plain Text Import/Export

- File Upload

- Custom Personas

- Custom Persona Creation Wizard

- Custom Persona Marketplace

- Context Ingestion (Business Data)

- Dashboard (Usage, Return on Investment)

Note: PaLM 2 is in the process of being migrated to the recently announced Google Gemini Pro and Ultra.

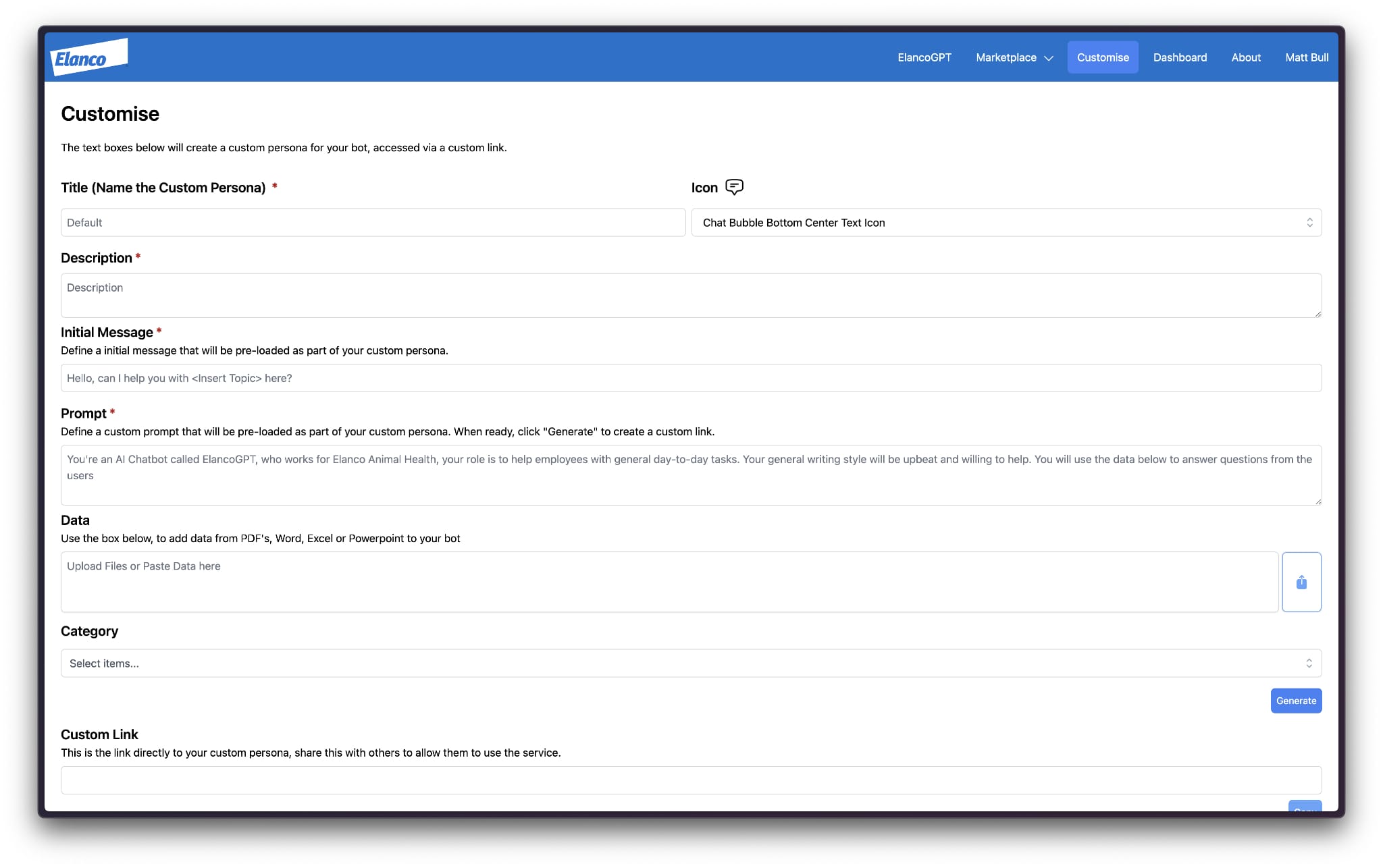

Highlights include the controls that ensure security, privacy, and compliance, alongside the custom persona capability, which allows anyone to create their own Generative AI chatbot, primed for a specific use case, with pre-defined context. This feature is similar to what OpenAI recently announced, called GPTs.

In addition, the decoupled architecture allows us to add/replace/modify large language models very easily. For example, GPT-4-Turbo was made available to all users the week of release. This flexibility extends to the custom persona capability, meaning that any new large language model can be instantly used with a custom persona without any engineering intervention.
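
To illustrate the idea, here is a minimal sketch of how a decoupled model layer could look. It is not our actual middleware: `ModelRegistry`, `Persona`, `ask`, and `make_stub_backend` are hypothetical names, and the backends are stubs rather than real Azure OpenAI or Vertex AI calls. The point is only that a persona carries context, not a model, so a newly registered model works with every existing persona.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A backend is any callable that turns (system_prompt, user_message) into a reply.
# In a real deployment this would wrap a cloud-specific SDK call (hypothetical here).
ModelBackend = Callable[[str, str], str]


class ModelRegistry:
    """Keeps model backends behind a common interface so they can be swapped freely."""

    def __init__(self) -> None:
        self._backends: Dict[str, ModelBackend] = {}

    def register(self, name: str, backend: ModelBackend) -> None:
        # Adding or replacing a model is a single dictionary update.
        self._backends[name] = backend

    def available(self) -> List[str]:
        return sorted(self._backends)

    def complete(self, name: str, system_prompt: str, user_message: str) -> str:
        if name not in self._backends:
            raise KeyError(f"Unknown model: {name}")
        return self._backends[name](system_prompt, user_message)


@dataclass(frozen=True)
class Persona:
    """A custom persona: a name plus pre-defined context, with no model baked in."""
    name: str
    system_prompt: str


def ask(registry: ModelRegistry, persona: Persona, model: str, message: str) -> str:
    # The persona supplies the context; the registry supplies the model.
    return registry.complete(model, persona.system_prompt, message)


def make_stub_backend(label: str) -> ModelBackend:
    # Stand-in for a real model call, so the sketch runs without any cloud access.
    def backend(system_prompt: str, user_message: str) -> str:
        return f"[{label}] ({system_prompt}) {user_message}"
    return backend


registry = ModelRegistry()
registry.register("gpt-4", make_stub_backend("gpt-4"))

persona = Persona(name="Security Analyst", system_prompt="Assess risk for our business.")
print(ask(registry, persona, "gpt-4", "Review this bulletin."))

# A newly released model is registered once and works with every existing persona.
registry.register("gpt-4-turbo", make_stub_backend("gpt-4-turbo"))
print(ask(registry, persona, "gpt-4-turbo", "Review this bulletin."))
```

In this sketch, adding a model never touches the persona definitions, which is the property that removes the need for engineering intervention.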

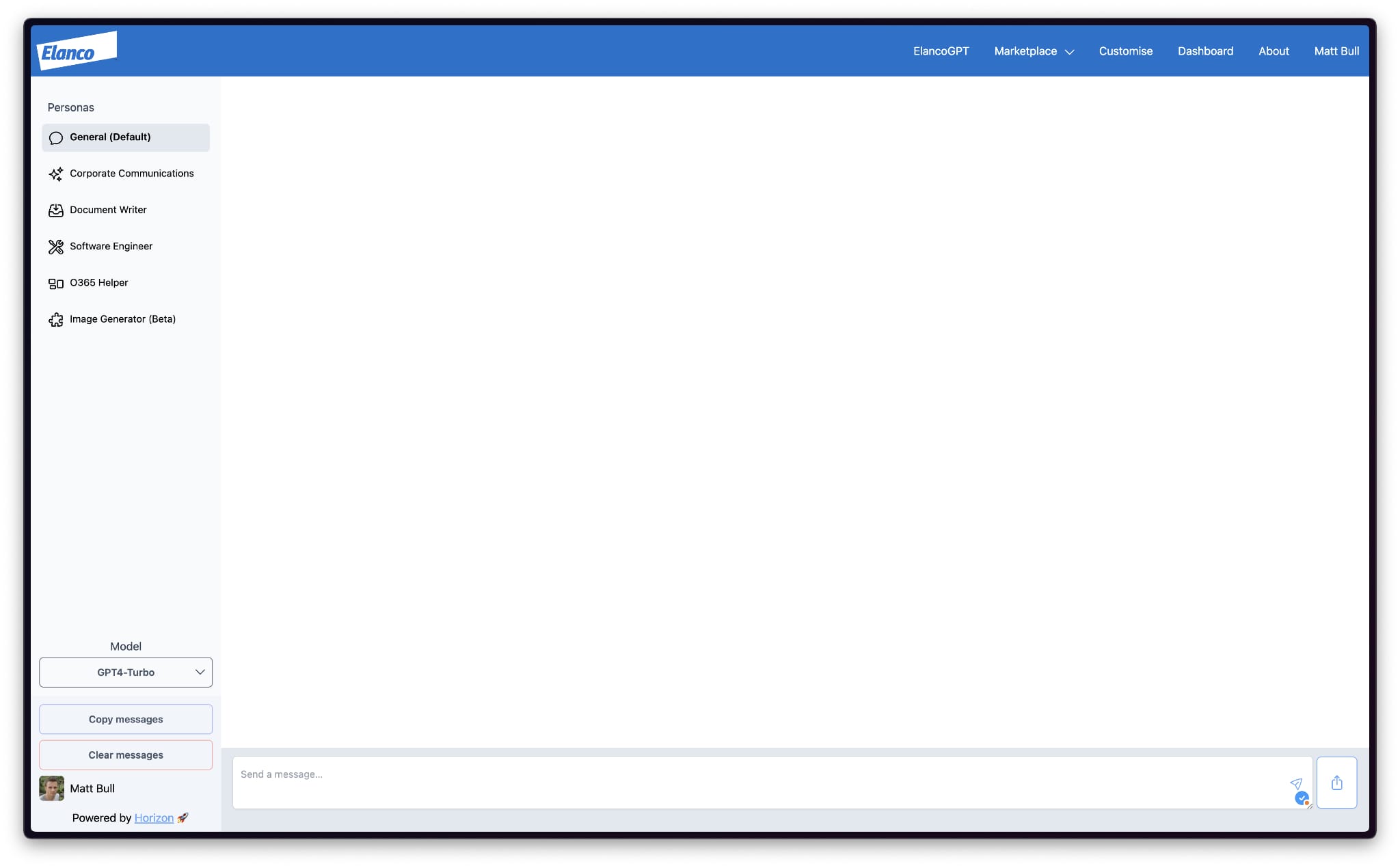

The screenshot below highlights the basic user interface, which is fully responsive, offering quick links to standard and custom personas, as well as model selection. Certain personas will default to a specific model, attempting to ensure the best user experience.

As is evident, the user interface is inspired by ChatGPT, which helps reduce the need for user training.

Earlier this month, we enhanced our custom persona capability, which allows anyone to create their own Generative AI chatbot. We have seen strong adoption of this feature, with over 500 custom personas created, covering a wide range of use cases. The screenshot below highlights the creation wizard.

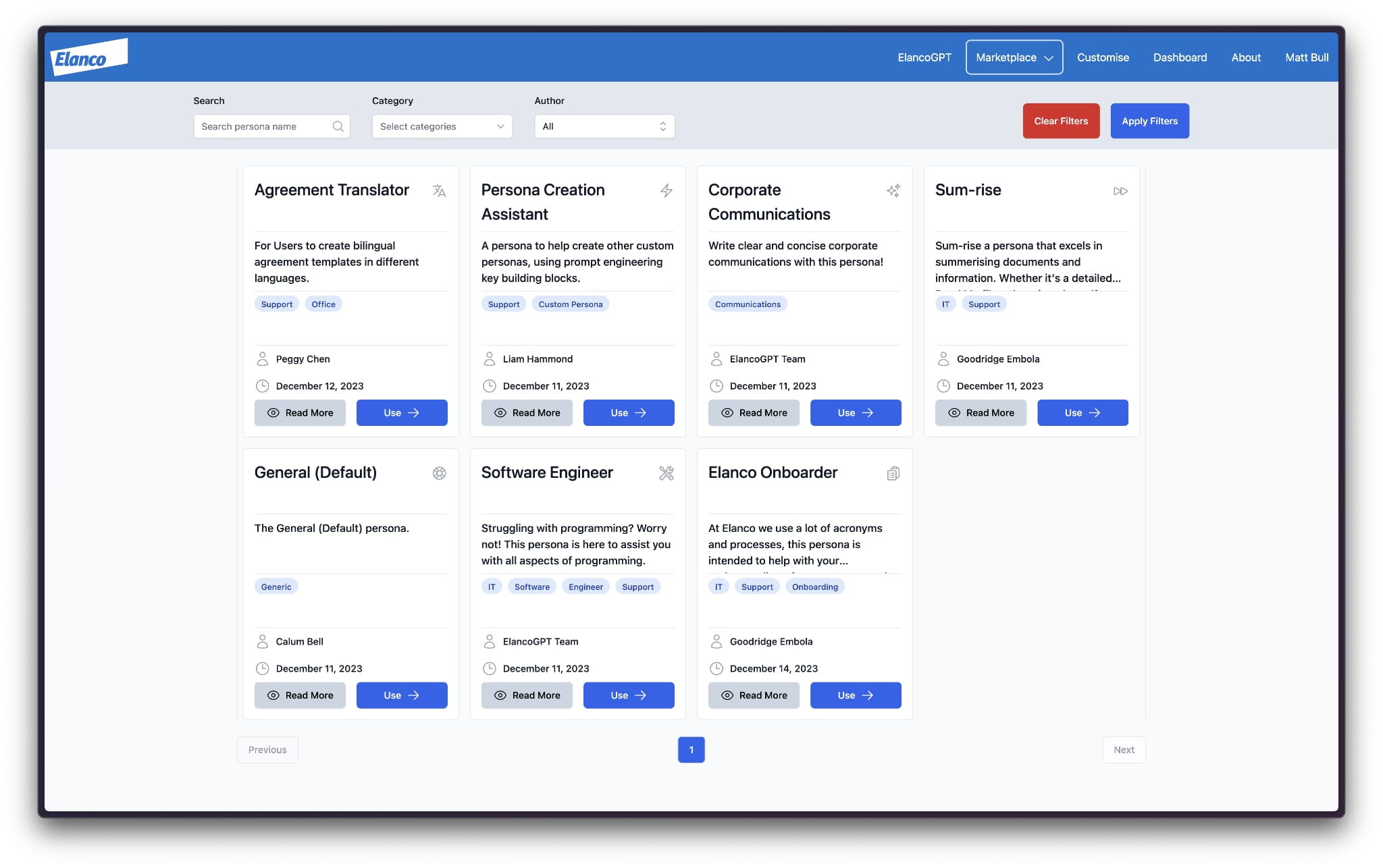

To ensure custom personas are discoverable and accessible, we have also implemented a marketplace (screenshot below). The creator can choose to publish their custom persona to the company, whilst retaining the option to edit it.

As an example, I have created a custom persona that ingests our Information Security architecture documentation. Therefore, I can simply upload any public security alert (e.g., notification, bulletin, briefing) to get a quick assessment of the risk, specific to our business. Considering I receive thousands of security updates across multiple channels, this custom persona is a great time saver.

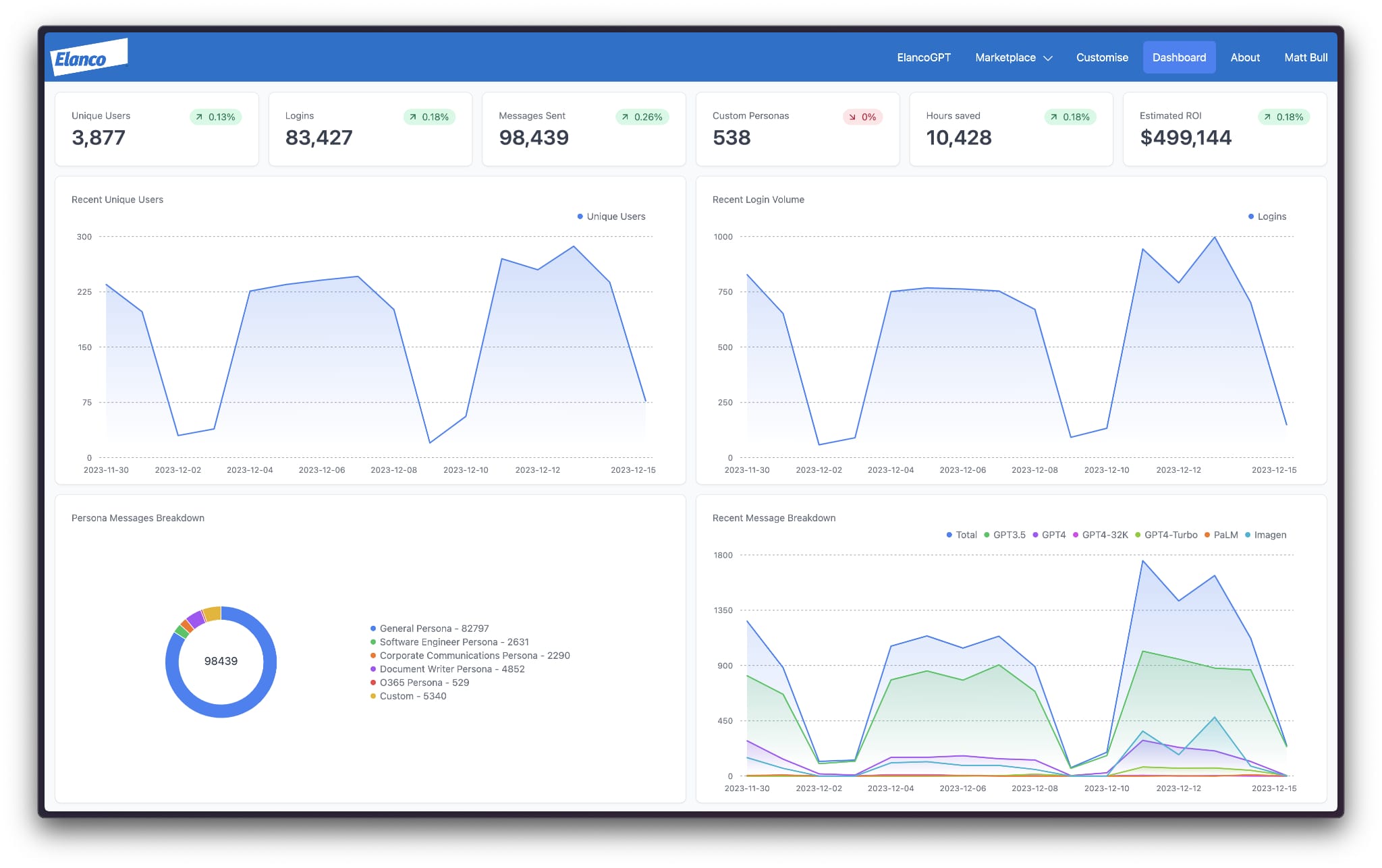

Finally, the screenshot below is the dashboard on 15 December 2023.

Since June, we have seen 3,877 unique users access the service (55% of the viable audience), with approximately 800 to 1,000 logins per day. This includes 538 active custom personas and broad usage of GPT-3.5, GPT-4, GPT-4-Turbo and Imagen 2. It will be interesting to see the Gemini Pro and/or Ultra growth in 2024.

Based on our conservative estimates, this usage has saved over 10,000 hours, which is the equivalent of $499,144 in savings through productivity enhancements and cost avoidance.

Considering this adoption has been organic (no formal organisational change management) and the service only costs approximately $120 per month to operate (all users), I am very impressed with the return on investment.
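
For anyone who wants to sanity-check the numbers, the arithmetic can be sketched as below. The input figures are the ones quoted in this post; the function names are hypothetical, and `MONTHS_IN_SERVICE = 7` is my assumption (June to December counted as full months). The ratio only covers the monthly operating cost, not the engineering time spent building the service, and because the hours figure is "over 10,000", the implied hourly value is an upper bound.

```python
# Figures quoted in this post.
VIABLE_USERS = 7000
UNIQUE_USERS = 3877
HOURS_SAVED = 10_000        # stated as "over 10,000", so treated as a lower bound
SAVINGS_USD = 499_144
MONTHLY_COST_USD = 120

# Assumption: June to December 2023 counted as seven full months of operation.
MONTHS_IN_SERVICE = 7


def adoption_rate(unique_users: int, viable_users: int) -> float:
    """Share of the viable audience that has used the service."""
    return unique_users / viable_users


def implied_hourly_value(savings_usd: float, hours_saved: float) -> float:
    """Dollar value attributed to each hour saved."""
    return savings_usd / hours_saved


def savings_to_operating_cost(savings_usd: float, monthly_cost_usd: float, months: int) -> float:
    """Estimated savings divided by operating cost over the same period."""
    return savings_usd / (monthly_cost_usd * months)


print(f"Adoption: {adoption_rate(UNIQUE_USERS, VIABLE_USERS):.1%}")
print(f"Implied value per hour saved: ${implied_hourly_value(SAVINGS_USD, HOURS_SAVED):.2f}")
print(f"Operating cost to date: ${MONTHLY_COST_USD * MONTHS_IN_SERVICE}")
ratio = savings_to_operating_cost(SAVINGS_USD, MONTHLY_COST_USD, MONTHS_IN_SERVICE)
print(f"Savings per dollar of operating cost: {ratio:.0f}")
```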

Overall, I am very pleased with the progress we have made in 2023. I am glad we chose to “lean in” to Generative AI, and I am excited to see what additional value we can drive in 2024!