Generative AI - Embeddings

Update: I have published three new articles, covering our progress on enabling context injection (embeddings) at scale, an overview of our latest enhancements, and an end-of-year summary.

Over the past couple of months, my team has been developing a Generative AI Chatbot for business, designed to accelerate productivity by streamlining common tasks.

The following articles document the development process, including the approach, features and architecture.

- OpenAI ChatGPT

- AI - Rise of the Machines

- Generative AI for Business

- Prompt Engineering

- Generative AI for Business - Update

In mid-June, following an early adopter phase, we released the Generative AI Chatbot to all employees and contractors. We did not formally communicate the release, instead relying on a one-hour virtual “Tech Talk”, which was attended by 480 participants.

In the first few weeks, we have seen impressive adoption: 1,134 unique users and 9,022 logins.

As further evidence of engagement, our “Custom Persona” feature has been used to create 1,108 purpose-built Generative AI Chatbots, each primed with business context to better support individual or team outcomes (e.g., R&D, Manufacturing, Commercial, Communications, Legal and Engineering).

It should be noted that our architecture is multi-tenant: a single instance of the service serves all users and teams, including every custom persona.

The total potential audience is 14,000 users (the number of corporate identities). However, when considering only applicable roles, the target audience falls to approximately 7,000 users.

Therefore, we have seen organic growth to 16.2% adoption (1,134 of approximately 7,000 users), achieved without any formal organisational change management.

To estimate the business value, let’s assume each login saved the user at least 10 minutes. Across 9,022 logins, that is roughly 1,504 hours (about 63 full 24-hour days) of productivity returned to the business. At an assumed hourly wage of $48, the productivity return equates to approximately $72,192.

The production service (including the web app, middleware, model and data) costs just $105.74 per month to operate, delivering an impressive return on investment.
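The adoption and productivity figures above can be reproduced with a few lines of Python. This is a minimal sketch that simply restates the article's own inputs and assumptions (10 minutes saved per login, a $48 hourly wage); the constant and function names are illustrative, and "days" here means 24-hour days rather than working days.

```python
# Inputs copied from the article. The 10-minute saving per login and the
# $48 hourly wage are the article's own stated assumptions.
UNIQUE_USERS = 1134
TARGET_AUDIENCE = 7000
LOGINS = 9022
MINUTES_SAVED_PER_LOGIN = 10
HOURLY_WAGE_USD = 48
MONTHLY_RUN_COST_USD = 105.74


def adoption_rate(users: int, audience: int) -> float:
    """Percentage of the target audience that has used the service."""
    return 100 * users / audience


def hours_saved(logins: int, minutes_per_login: int) -> int:
    """Total hours returned to the business, rounded to the nearest hour."""
    return round(logins * minutes_per_login / 60)


rate = adoption_rate(UNIQUE_USERS, TARGET_AUDIENCE)
hours = hours_saved(LOGINS, MINUTES_SAVED_PER_LOGIN)
value_usd = hours * HOURLY_WAGE_USD

print(f"Adoption: {rate:.1f}%")                             # 16.2%
print(f"Hours saved: {hours:,} (~{hours / 24:.0f} days)")   # 1,504 (~63 days)
print(f"Productivity value: ${value_usd:,}")                # $72,192
# Rough comparison only: the value covers the first few weeks of usage,
# while the run cost is for one month.
print(f"Value / monthly run cost: {value_usd / MONTHLY_RUN_COST_USD:.0f}x")
```

Note that the value is computed from the rounded hour total, matching the article's arithmetic; using the unrounded 1,503.7 hours gives a figure a few dollars lower.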

This early success has energised the team to pursue the next phase of value: context injection at scale.

Context Injection at Scale (Embeddings)

In my previous article, I outlined our strategy to use traditional data tagging, search and prompt stuffing to add business context.

Unfortunately, this strategy has proven less effective than we had originally hoped, with inconsistent responses that (so far) have failed to meet our quality assurance expectations. For example, unrelated or irrelevant information is sometimes confidently presented to the user as fact (hallucination).

As a result, we are exploring a more complex architecture that leverages a technique known as “embeddings”, which represents text as vectors of floating point numbers so that similar information sits close together. The embeddings can then be used to search for relevant information, with the results injected into the prompt.
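To make the idea concrete, the sketch below ranks passages by cosine similarity to a query vector and injects the best matches into a prompt. It is a minimal illustration under stated assumptions, not our implementation: the three-dimensional vectors are hand-picked stand-ins for what an embedding model would produce, and the passages, `top_k` and `build_prompt` are hypothetical.

```python
import math

# Illustrative 3-dimensional vectors chosen by hand; a real embedding model
# would generate these (typically with hundreds or thousands of dimensions).
KNOWLEDGE_BASE: dict[str, list[float]] = {
    "Expense claims must be submitted within 30 days.": [0.9, 0.1, 0.0],
    "The cafeteria opens at 7am.": [0.0, 0.2, 0.9],
    "Travel bookings require manager approval.": [0.7, 0.3, 0.1],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], k: int = 2) -> list[str]:
    """Return the k passages whose vectors are most similar to the query."""
    ranked = sorted(
        KNOWLEDGE_BASE,
        key=lambda text: cosine_similarity(query_vec, KNOWLEDGE_BASE[text]),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Inject the retrieved passages into the prompt as grounding context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical query vector, standing in for the embedding of the question.
question = "How long do I have to submit an expense claim?"
print(build_prompt(question, top_k([0.8, 0.2, 0.0])))
```

With this query vector the expense and travel passages score highest, so the unrelated cafeteria passage never reaches the prompt; that filtering is what is intended to reduce irrelevant context.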

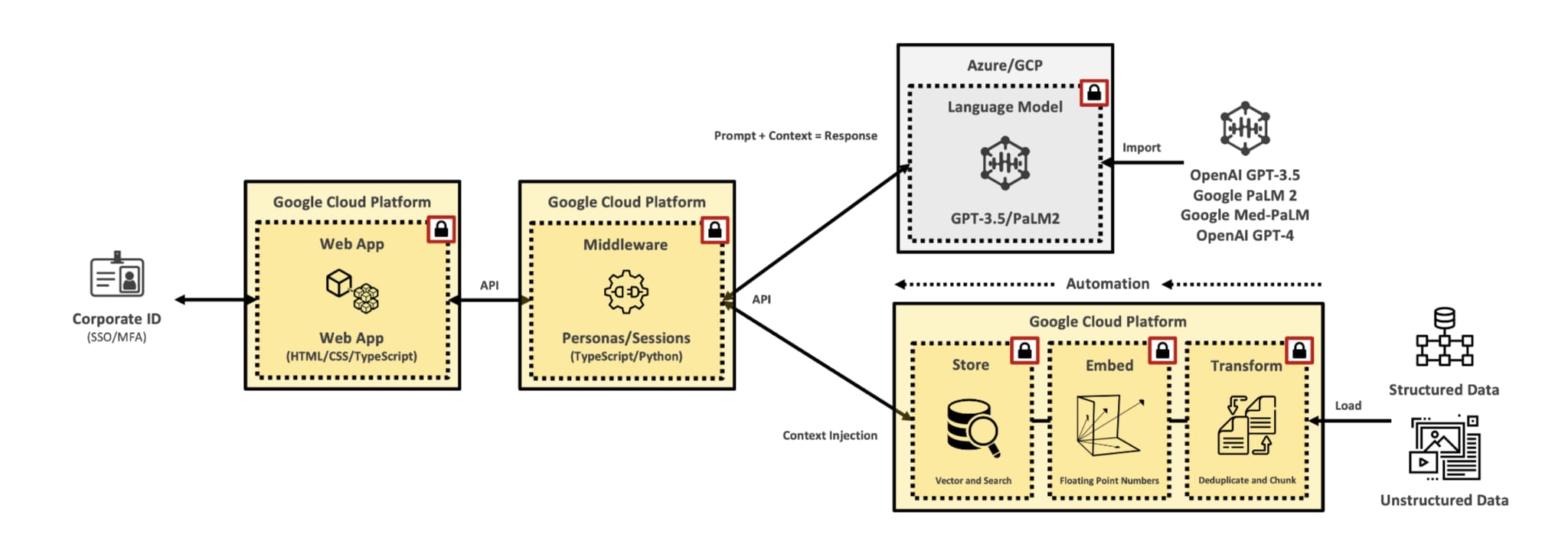

The “marchitecture” diagram below highlights the “embeddings” design we have implemented.

As highlighted by the diagram, we have implemented a four-phase architecture to inject context, specifically:

- Load: Ingest structured and/or unstructured data from source systems.

- Transform: Data quality is important. Therefore, depending on the data type, the data must be deduplicated, simplified and/or chunked; for example, large multi-page documents are split into individual pages. We are also experimenting with converting documents to Markdown or MDX, which can help preserve formatting and identify links, code snippets, etc.

- Embed: Embeddings help ensure relevant information is injected into the prompt. An embedding model takes text (from the transform phase) and converts it into a vector (list) of floating point numbers. The distance between two vectors measures their relatedness, so similar (relevant) information ends up close together.

- Store: The embeddings are stored in a vector database (for example, pgvector). To search it, the input prompt is converted into an embedding, and a similarity search finds the most relevant stored information.
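The four phases can be sketched end to end in plain Python. This is a toy under loudly stated assumptions: the source documents are hypothetical in-memory strings (with `\f` marking page breaks), the "embedding" is a bag-of-words count vector rather than a real embedding model, and `VectorStore` is an in-memory stand-in for a vector database such as pgvector.

```python
import math
import re
from dataclasses import dataclass, field

# --- Load: hypothetical source documents; "\f" marks a page break ---
SOURCE_DOCUMENTS = {
    "travel-policy": (
        "Flights over 6 hours may be booked in premium economy.\f"
        "All travel requires manager approval before booking."
    ),
    "expense-policy": (
        "Submit expense claims within 30 days of purchase.\f"
        "Submit expense  claims within 30 days of purchase."  # duplicate page
    ),
}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


# --- Transform: chunk by page, normalise whitespace, drop duplicate chunks ---
def transform(docs: dict[str, str]) -> list[tuple[str, str]]:
    seen: set[str] = set()
    chunks: list[tuple[str, str]] = []
    for doc_id, text in docs.items():
        for page_no, page in enumerate(text.split("\f"), start=1):
            clean = " ".join(page.split())
            key = clean.lower()
            if clean and key not in seen:
                seen.add(key)
                chunks.append((f"{doc_id}#p{page_no}", clean))
    return chunks


# --- Embed: bag-of-words counts over a fixed vocabulary, standing in for a
# real embedding model; vectors are scaled to unit length ---
def embed(text: str, vocab: dict[str, int]) -> list[float]:
    vec = [0.0] * len(vocab)
    for token in tokenize(text):
        if token in vocab:  # words outside the vocabulary are ignored
            vec[vocab[token]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


# --- Store: in-memory stand-in for a vector database such as pgvector ---
@dataclass
class VectorStore:
    vocab: dict[str, int]
    rows: list[tuple[str, str, list[float]]] = field(default_factory=list)

    def add(self, chunk_id: str, text: str) -> None:
        self.rows.append((chunk_id, text, embed(text, self.vocab)))

    def search(self, query: str, k: int = 2) -> list[tuple[str, float]]:
        q = embed(query, self.vocab)
        # Unit-length vectors: the dot product equals cosine similarity.
        scored = [(cid, sum(a * b for a, b in zip(q, vec))) for cid, _, vec in self.rows]
        return sorted(scored, key=lambda s: s[1], reverse=True)[:k]


chunks = transform(SOURCE_DOCUMENTS)
vocab = {tok: i for i, tok in enumerate(sorted({t for _, c in chunks for t in tokenize(c)}))}
store = VectorStore(vocab)
for chunk_id, text in chunks:
    store.add(chunk_id, text)

print(store.search("expense claims deadline", k=1))
```

The duplicate expense page (differing only in whitespace) is dropped during the transform phase, leaving three chunks. In a real deployment the store step would be a pgvector table with a `vector` column, where the `<=>` operator returns cosine distance; a learned embedding model would also match paraphrases that share no words with the query, which this bag-of-words stand-in cannot do.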

We are currently testing this strategy in a non-production environment against a range of data types, targeting our internal knowledge articles, policies and similar content. Although the architecture is more complex, our initial results have been positive, with more consistent and accurate responses.

I will post another update once our testing has concluded.