Implementing Zero Trust

Zero Trust was first referenced in 1994. However, it was not until 2010 that the modern definition of Zero Trust started to emerge. In 2023, Zero Trust is a common “buzz term” across cybersecurity, frequently referenced by vendors, researchers and analysts.

I am an advocate of Zero Trust as the successor to the now inadequate moat/castle strategy. Over the years, I have written a few articles about Zero Trust and partnered with Microsoft to promote the core principles and concepts.

The term “Zero Trust” can be confusing. Therefore, when asked, I usually respond with the following definition.

Zero Trust is a strategy for IT security, which assumes that internal and external threats always exist and that all networks are inherently hostile.

The traditional concept of a perimeter is disregarded, shifting the emphasis to Identity and Access Management (IAM), where all connections accessing business assets must first be authenticated and authorised.

In this scenario, IT services are individually secured and monitored at source, following the Principle of Least Privilege (PoLP).

Therefore, a holistic security posture can be maintained regardless of the user or IT service location, with appropriate threat identification and blast radius controls.
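To make the definition more concrete, the following is a minimal sketch of a deny-by-default access decision. The `AccessRequest` shape, the `GRANTS` table and the identity and asset names are all hypothetical, chosen only for illustration. The point it tries to show is that the decision depends on authentication and an explicit least-privilege grant, and never on the network the request came from.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """A single connection attempt against a business asset (hypothetical shape)."""
    identity: str        # who is asking, e.g. a user or service name
    authenticated: bool  # outcome of authentication, e.g. MFA passed
    asset: str           # the asset being accessed
    action: str          # the operation requested, e.g. "read"
    network: str         # origin of the request; deliberately never consulted


# Least privilege: each identity holds only the (asset, action) pairs it needs.
# These entries are made-up examples, not a recommended set of grants.
GRANTS: dict[str, set[tuple[str, str]]] = {
    "alice": {("payroll-db", "read")},
    "backup-service": {("payroll-db", "read"), ("backup-vault", "write")},
}


def is_allowed(request: AccessRequest) -> bool:
    """Deny by default; allow only authenticated requests with an explicit grant."""
    if not request.authenticated:
        return False
    return (request.asset, request.action) in GRANTS.get(request.identity, set())
```

In this sketch, a request from `alice` to read `payroll-db` is allowed from any network once authenticated, while the same identity asking to write, or any unauthenticated request from the corporate LAN, is refused.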

The challenge with this definition (and Zero Trust in general) is how it can be tangibly applied within a business, specifically an enterprise business.

Therefore, this article will aim to provide more context to Zero Trust, highlighting how I position and apply the core principles and concepts.

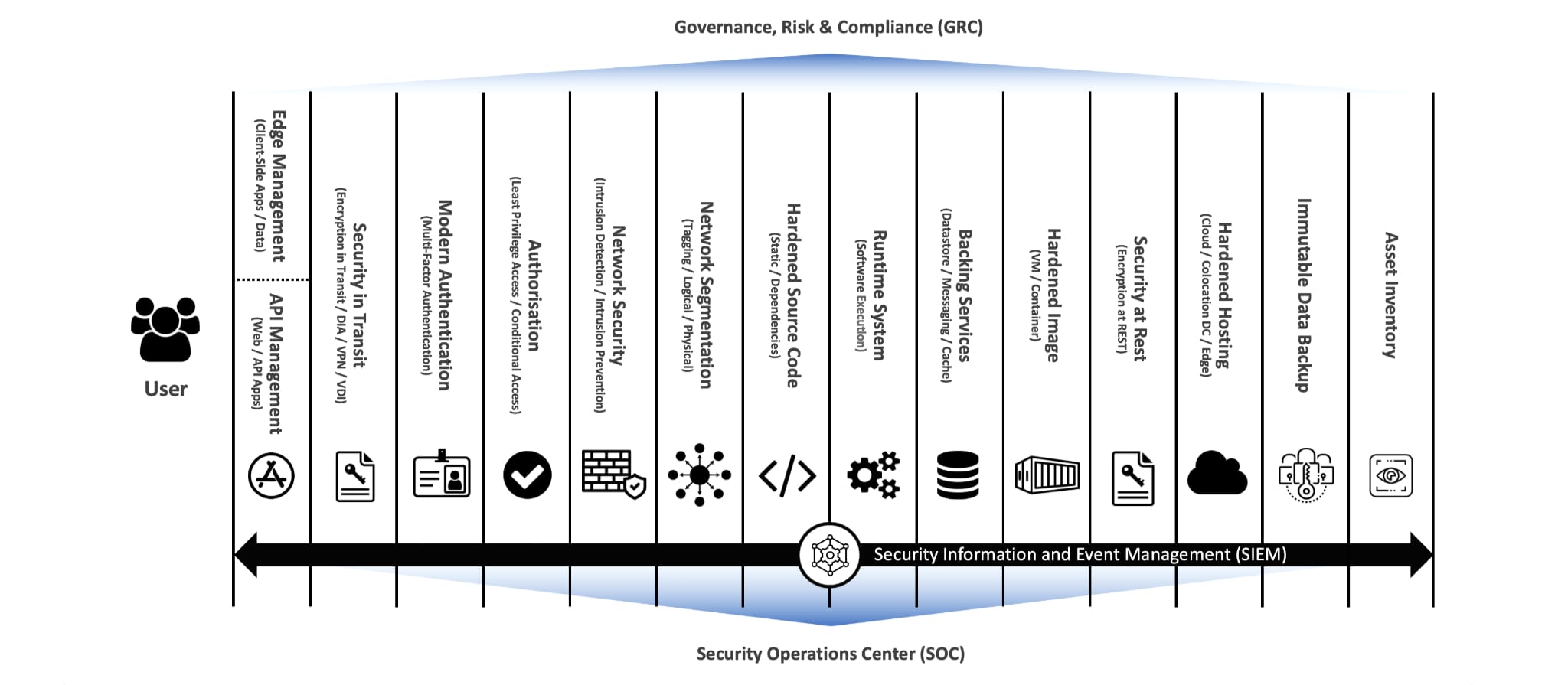

The diagram above is a high-level architecture overview, outlining the layers that contribute to the security model.

NOTE: This high-level architecture overview will not apply to every business. It is my perspective, which can be used as a guide (starting point).

From left to right, the diagram starts with the user (e.g., employee, contractor, customer) and proceeds through layers of security to access the desired runtime system (application), supported by a range of back office services (hosting, backup, asset inventory, etc.).

Vertically, the diagram highlights the need for holistic Governance, Risk and Compliance (GRC), as well as unified Security Operations, ideally fed via a standardised information/event management architecture.

In a perfect world, each layer would be enabled through programmatically defined automation.

This approach to automation allows policy directives and standards to be enforced proactively and consistently as controls, managed via code that can be easily verified to ensure compliance.
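As a rough illustration of controls managed via code, the sketch below expresses two standards as small rule functions and evaluates a list of resources against them. The resource fields (`encrypted_at_rest`, `owner`), the rule names and the sample resources are assumptions made for this example; a real environment would more likely rely on a dedicated policy engine.

```python
from typing import Callable, Optional

# A rule returns a message when the resource breaks the standard, otherwise None.
Rule = Callable[[dict], Optional[str]]


def require_encryption_at_rest(resource: dict) -> Optional[str]:
    if not resource.get("encrypted_at_rest", False):
        return "encryption at rest is not enabled"
    return None


def require_owner(resource: dict) -> Optional[str]:
    if not resource.get("owner"):
        return "no owner assigned"
    return None


RULES: list[Rule] = [require_encryption_at_rest, require_owner]


def evaluate(resources: list[dict]) -> list[str]:
    """Return one message per failed rule; an empty list means compliant."""
    violations = []
    for resource in resources:
        for rule in RULES:
            message = rule(resource)
            if message is not None:
                violations.append(f"{resource['name']}: {message}")
    return violations


# Hypothetical resource descriptions, e.g. exported from a deployment pipeline.
resources = [
    {"name": "orders-db", "encrypted_at_rest": True, "owner": "data-team"},
    {"name": "legacy-share", "encrypted_at_rest": False, "owner": ""},
]
violations = evaluate(resources)
```

Because the rules are plain code, they can be versioned, reviewed and run in a pipeline, failing a deployment when `violations` is not empty.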

The bullets below describe each layer.

- Edge Management: In the context of client-side (non-web) applications/data, specific capabilities to discover, manage and protect the user endpoint, or specific applications and data on that endpoint. For example, Endpoint Management and/or Mobile Application Management (MAM).

- API Management: In the context of modern web-first applications/data, specific capabilities to document, discover, access, manage, orchestrate, scale and secure API endpoints.

- Security in Transit: Encryption in transit for modern web-first applications/data, alongside secure remote access capabilities to interact with services that are not web-first or Internet-facing. For example, Secure Access Service Edge (SASE).

- Modern Authentication: Specific capabilities to prove an assertion, such as the identity of a user or computer system, including Identity Governance Lifecycle, Multi-Factor Authentication and Public Key Infrastructure.

- Authorisation: Specific capabilities to manage access rights/privileges to services, with an emphasis on Least Privilege Access (specifically Privileged Access), supported by dynamic Conditional Access.

- Network Security: Network-based capabilities with application-layer (Layer 7) inspection and management, supporting DNS/DHCP, as well as macro and micro-segmentation of secure zones. Segmentation is especially valuable for environments that must meet specific availability and/or compliance requirements.

- Hardened Source Code: A code base tracked in version control, deployed via a standard pipeline that includes controls to proactively enforce quality, privacy, and security. For example, code, dependency and secret analysis.

- Runtime System: Real-time monitoring of the runtime environment to verify that the running code and dependencies are up to date and operating as designed (no configuration drift, malicious code, etc.).

- Backing Services: Connected resources, accessed via a URL or other locator/credentials stored in a separate vault. Backing services could include data stores, caches, messaging services, etc. Supporting capabilities cover data catalogue, labelling, monitoring, loss prevention, etc.

- Security at Rest: Encryption at rest, alongside physical security controls.

- Hardened Hosting: Capabilities to monitor and protect the hosting environments (Cloud, Data Centre, Edge), ensuring the foundations are hardened in line with pre-defined, documented standards.

- Immutable Data Backup: Data protection capabilities to support backup and recovery, including an immutable filesystem to protect against ransomware attacks.

- Asset Inventory: A dynamically maintained list of all IT assets (endpoints, appliances, services, applications, data), including the relevant metadata to manage responsibility assignment and dependency mapping, highlighting critical assets to enable risk-based decisions.
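As an example of how asset inventory metadata can support risk-based decisions, the sketch below models assets with an owner, a criticality flag and a list of dependencies, then finds every asset that a critical asset relies on. The `Asset` fields and the sample inventory are hypothetical and only intended to illustrate dependency mapping.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """One inventory record (hypothetical fields for illustration)."""
    name: str
    kind: str                 # e.g. "application", "data", "service"
    owner: str                # responsibility assignment
    critical: bool = False
    depends_on: list[str] = field(default_factory=list)


def critical_dependencies(inventory: dict[str, Asset]) -> set[str]:
    """Return every asset that a critical asset relies on, directly or indirectly."""
    found: set[str] = set()
    stack = [asset.name for asset in inventory.values() if asset.critical]
    while stack:
        current = stack.pop()
        if current not in inventory:
            continue  # dependency is missing from the inventory; nothing to follow
        for dependency in inventory[current].depends_on:
            if dependency not in found:
                found.add(dependency)
                stack.append(dependency)
    return found


# Made-up sample inventory.
inventory = {
    asset.name: asset
    for asset in [
        Asset("payments-api", "application", "payments-team", critical=True,
              depends_on=["orders-db", "identity-provider"]),
        Asset("orders-db", "data", "data-team", depends_on=["backup-vault"]),
        Asset("identity-provider", "service", "security-team"),
        Asset("backup-vault", "service", "platform-team"),
        Asset("office-printer", "appliance", "facilities"),
    ]
}
supporting = critical_dependencies(inventory)
```

Here `supporting` contains `orders-db`, `identity-provider` and `backup-vault`, which suggests these assets deserve the same level of attention as the critical application that depends on them.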

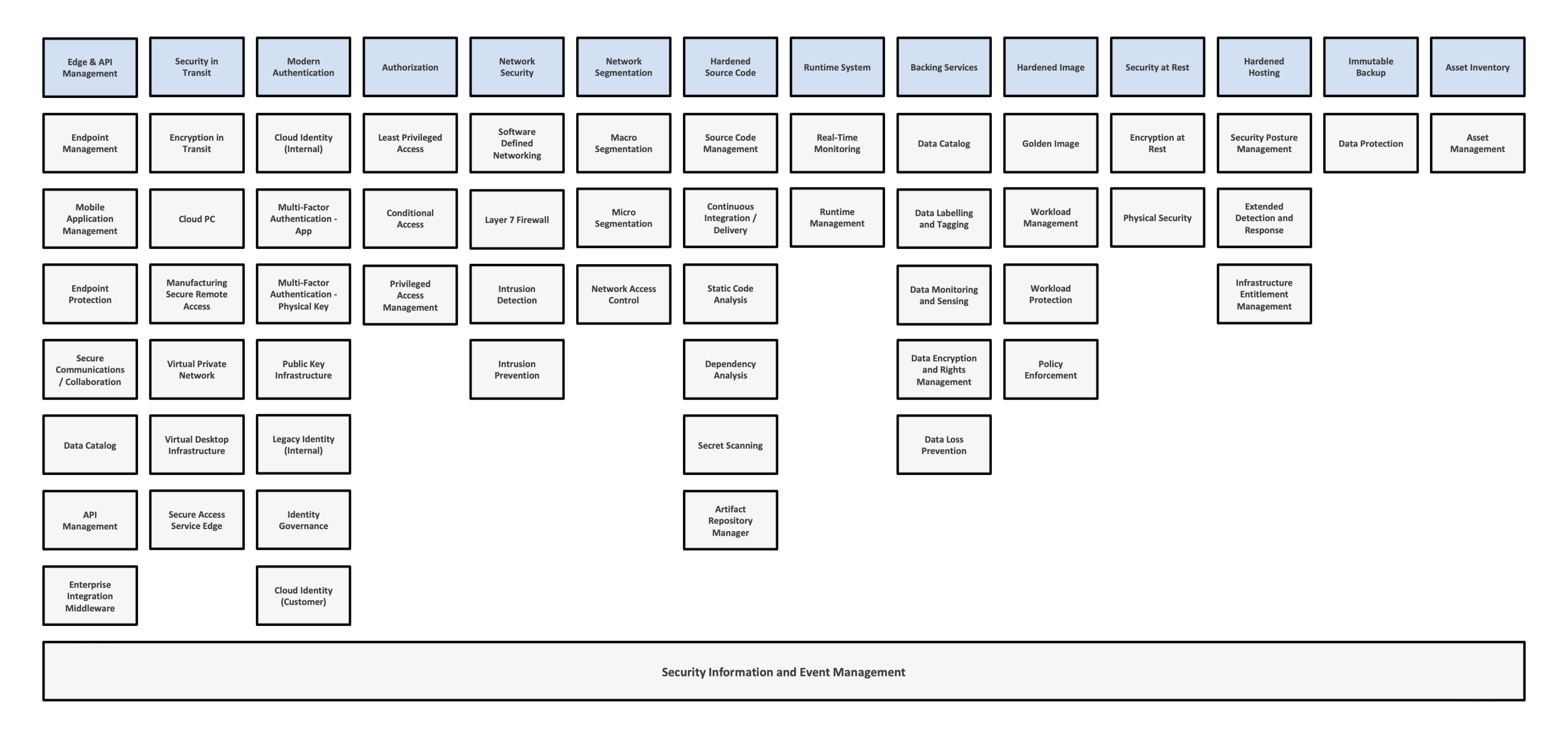

With the layers defined, a capability map can be produced (as highlighted in the diagram above). As previously stated, the required capabilities will depend on the specific business and risk posture.

That’s it! Hopefully, this information has provided more context regarding Zero Trust, specifically how it can be tangibly applied within a business. As an additional reference, I would recommend reviewing the National Cyber Security Centre (NCSC) Zero Trust Architecture, which outlines eight key principles.