NVIDIA GeForce RTX 5090

Earlier this month, I upgraded my custom-built PC. I documented this process across two articles.

The impetus for this change was the NVIDIA GeForce RTX 5090 announcement, which felt like a worthy upgrade to my ageing NVIDIA GeForce RTX 3090 (I had skipped the NVIDIA 40 series).

Unfortunately, NVIDIA’s launch was disappointing, with many issues. Most notably, there was limited supply, an uncontrolled ordering process, and MSRP pricing that did not hold at retail.

Due to these issues, it was almost impossible to purchase an NVIDIA GeForce RTX 5090 at MSRP, resulting in aggressive scalping that pushed the price above £3500 (£1500 above MSRP).

In a perfect world, I would select a different graphics card. Unfortunately, there is no comparable alternative to the NVIDIA GeForce RTX 5090. Therefore, I decided to delay my purchase (selecting an AMD Radeon RX 9070 XT in the interim) until the market stabilised and/or the pricing improved.

My team recently attended the NVIDIA GPU Technology Conference (GTC). I did not expect any NVIDIA GeForce 50 series graphics cards to be on sale. However, to my surprise, a colleague was able to secure me an NVIDIA GeForce RTX 5090 Founders Edition for under MSRP (£1688.15).

Although still very expensive for a consumer-grade graphics card, this price is arguably a bargain: roughly £250 below MSRP and upwards of £1750 below current market pricing.

The full specification of the NVIDIA GeForce RTX 5090 Founders Edition can be found below.

- Architecture: Blackwell 2.0

- Process: TSMC 4N FinFET (5nm)

- Clocks: 2017MHz (Base) / 2407MHz (Boost)

- Shading Units: 21,760

- Texture Mapping Units: 680

- Render Output Units: 176

- Streaming Multiprocessors Count: 170

- Tensor Cores: 680

- Ray Tracing Cores: 170

- Memory: 32GB GDDR7 (512bit / 1750MHz)

- Interface: PCI Express 5.0

In short, this graphics card is a monster, likely pushing the TSMC 4N FinFET (5nm) process to its limit. This also explains the 575W power requirement, an increase of 125W from the NVIDIA GeForce RTX 4090. As a result, NVIDIA recommends a 1000W PSU or greater, with a native 12V-2x6 connection.

In addition to the increase in power, the NVIDIA GeForce RTX 5090 has 21,760 CUDA cores, approximately 33% more than the NVIDIA GeForce RTX 4090 (16,384 CUDA cores).

The NVIDIA GeForce RTX 5090 also transitions to fifth-generation Tensor and fourth-generation RT cores.

These enhancements are combined with 32GB of GDDR7 VRAM on a 512-bit memory interface, delivering an impressive memory bandwidth of 1,792GB/s. That is a significant increase over the NVIDIA GeForce RTX 4090 (1,008GB/s) and the ultra-fast unified memory found on the Apple M4 Max SoC (546GB/s).
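For anyone curious where the bandwidth figures come from, peak theoretical bandwidth is simply the bus width (in bytes) multiplied by the per-pin data rate. The sketch below is illustrative only: the function name is my own, and the per-pin data rates (28Gbps for GDDR7, 21Gbps for GDDR6X) and the 384-bit bus of the NVIDIA GeForce RTX 4090 are assumptions that are not stated elsewhere in this article.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s: bus width in bytes x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed figures: 28 Gbps per pin (GDDR7) on the 512-bit bus quoted above.
rtx_5090 = memory_bandwidth_gbs(512, 28.0)
# Assumed figures: 384-bit bus at 21 Gbps per pin (GDDR6X).
rtx_4090 = memory_bandwidth_gbs(384, 21.0)

print(f"RTX 5090: {rtx_5090:.0f} GB/s")
print(f"RTX 4090: {rtx_4090:.0f} GB/s")
print(f"Uplift:   {rtx_5090 / rtx_4090 - 1:.0%}")
```

Under those assumptions the results line up with the 1,792GB/s and 1,008GB/s quoted above. These are theoretical peaks, so real-world throughput will be lower.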

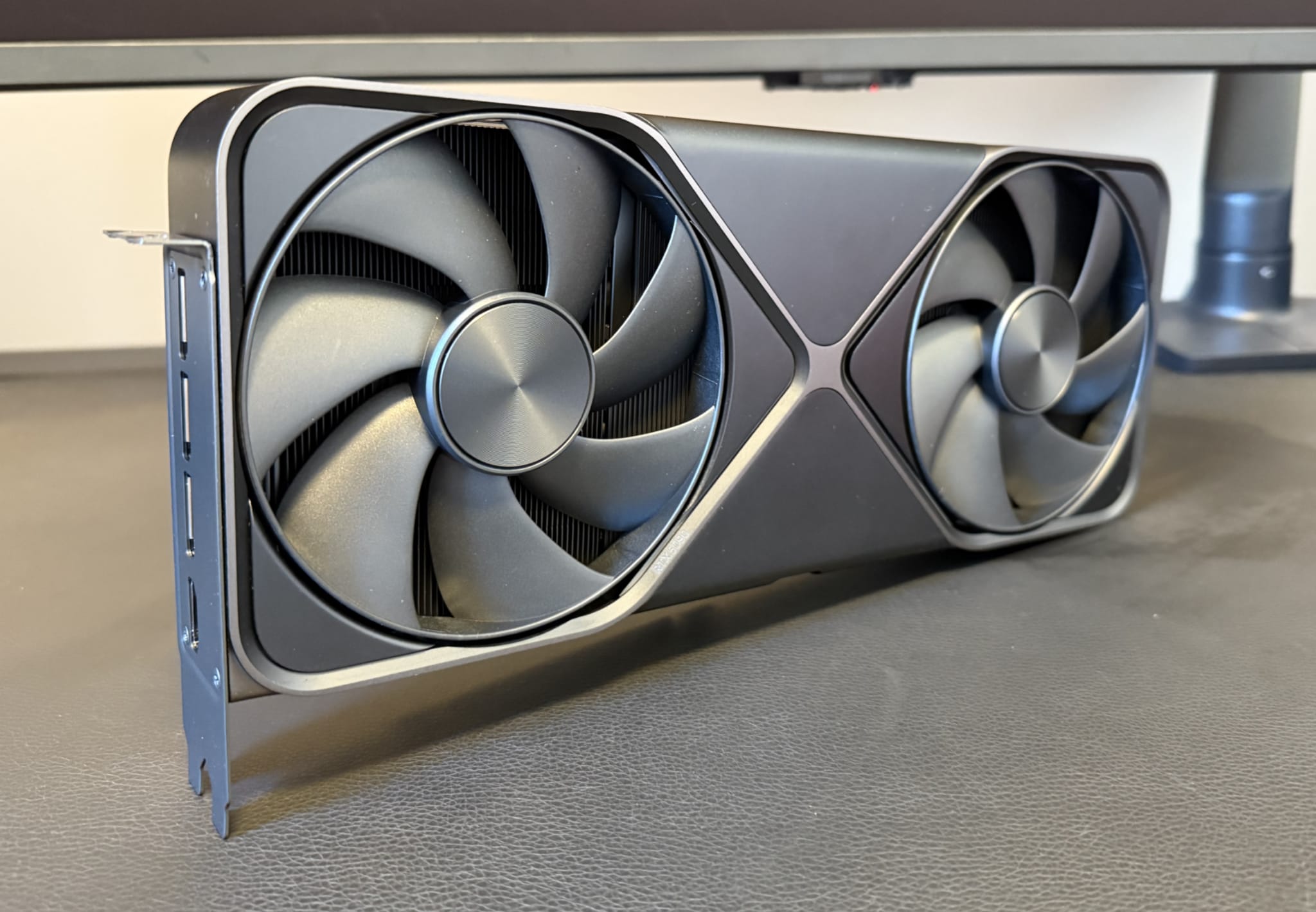

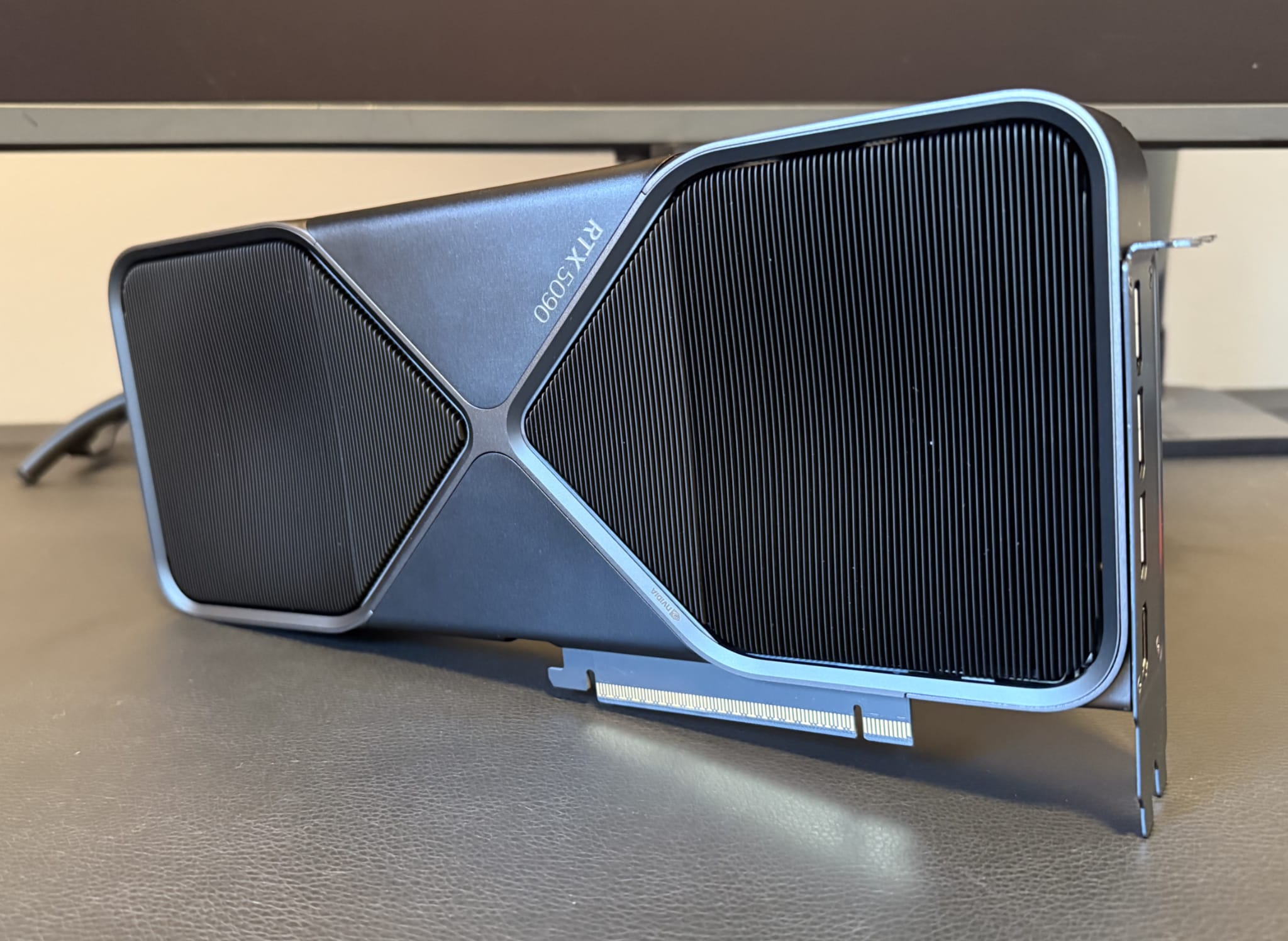

Considering the power requirements, you would assume that the NVIDIA GeForce RTX 5090 would require a large, elaborate cooling solution. Interestingly, the NVIDIA engineers have demonstrated their expertise by delivering a relatively compact, two-slot design, with a split PCB. This design appears to cool the graphics card very effectively, without any drastic compromises regarding the design, size or noise.

In my opinion, the NVIDIA GeForce RTX 5090 is the “best” built graphics card I have ever owned, with a premium design, high-quality materials and a reassuring construction.

Unsurprisingly, this specification delivers exceptional gaming performance, in line with what you would expect from a generational update that leverages the same 5nm process. With that said, it would appear most of this performance benefit is delivered via the additional 125W of power, further indicating the limitations of the TSMC 4N FinFET (5nm) process.

I suspect NVIDIA anticipated this limitation, prompting their heavy investment and marketing into software improvements, specifically Deep Learning Super Sampling (DLSS) 4, including Multi Frame Generation (MFG).

DLSS 4 leverages a transformer-based AI model for Super Resolution, which is also backwards compatible with the NVIDIA GeForce RTX 40/30/20 series. I consider DLSS (or equivalent) a “must-have” feature, capable of delivering incredible results.

MFG, which can generate up to three additional frames per rendered frame, is more controversial. It is also exclusive to the NVIDIA GeForce RTX 50 series. As advertised, the technology delivers impressive FPS increases. However, it does not improve latency and can impact image quality. Therefore, I would only recommend MFG in slower-paced games (not twitch shooters) where the base FPS is already consistently above 60.
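To illustrate why the FPS counter climbs while responsiveness does not, here is a rough sketch of the arithmetic. It is an idealised model of my own (the function names are hypothetical), and it assumes frame generation itself costs nothing, which is not true in practice, so treat the displayed FPS as an upper bound.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Idealised upper bound: each rendered frame is followed by N generated frames."""
    return rendered_fps * (1 + generated_per_rendered)


def rendered_frame_time_ms(rendered_fps: float) -> float:
    """Time between rendered frames; input responsiveness roughly tracks this value."""
    return 1000.0 / rendered_fps


def mfg_recommended(rendered_fps: float, min_base_fps: float = 60.0) -> bool:
    """My rule of thumb from above: only enable MFG when the base FPS exceeds 60."""
    return rendered_fps > min_base_fps


for base in (40, 70, 100):
    print(
        f"base {base} FPS -> up to {displayed_fps(base, 3):.0f} FPS displayed, "
        f"{rendered_frame_time_ms(base):.1f} ms between rendered frames, "
        f"MFG recommended: {mfg_recommended(base)}"
    )
```

The time between rendered frames is unchanged regardless of how many frames are generated, which is why a low base FPS still feels sluggish even when the displayed number looks healthy.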

With my Samsung G95NC Odyssey Neo G9 display at native resolution (7680x2160), in games such as Cyberpunk 2077 and Indiana Jones, I am able to max all settings, including Ray Tracing and Path Tracing (where supported), whilst still achieving an FPS of above 100. With MFG enabled, this number can easily surpass 250.

Outside of gaming performance, my interest also includes the potential for Artificial Intelligence (AI) acceleration, specifically Generative AI. This is where the CUDA core count and memory capacity/bandwidth provide significant value.

The NVIDIA GeForce RTX 5090 supports CUDA Compute Capability 12.0, with the 32GB of VRAM offering enough capacity to run 12B parameter AI models natively and up to 50B parameter AI models with low-bit quantization.
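As a back-of-the-envelope check on those model sizes, the weights of a model need roughly (parameter count x bits per weight / 8) bytes. The sketch below is a simplification with hypothetical names: it assumes "natively" means 16-bit weights, treats 1GB as one billion bytes, and ignores the KV cache, activations and framework overhead, all of which consume additional VRAM.

```python
VRAM_GB = 32  # NVIDIA GeForce RTX 5090


def weight_footprint_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


# (label, parameters in billions, bits per weight); the precisions are assumptions.
scenarios = [
    ("12B at 16-bit", 12, 16),
    ("50B at 8-bit", 50, 8),
    ("50B at 4-bit", 50, 4),
]

for label, params, bits in scenarios:
    size = weight_footprint_gb(params, bits)
    verdict = "fits" if size <= VRAM_GB else "does not fit"
    print(f"{label}: ~{size:.0f} GB of weights, {verdict} in {VRAM_GB} GB")
```

On these assumptions, a 50B parameter model only fits once it is quantized to around 4 bits per weight, and even then the remaining headroom for context is limited.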

As a reminder, quantization is a technique that reduces the computational and memory requirements of AI models by representing their weights and activations with lower-precision data types, like 8-bit integers instead of 32-bit floating points, enabling faster and more efficient deployments. Lower bit quantizations represent more aggressive compression, leading to smaller model sizes but potentially higher quality loss.
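To make that concrete, here is a minimal sketch of one simple scheme, symmetric per-tensor 8-bit quantization, written in plain Python. It is for illustration only: the weights are made-up values, the function names are my own, and real inference stacks typically use more sophisticated schemes (per-channel or per-group scales, 4-bit formats and so on).

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # one shared scale for the whole tensor
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.003, 0.5, -0.31]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("restored:   ", [round(r, 3) for r in restored])
print(f"largest rounding error: {max_error:.4f}")
```

Each weight is stored in one byte instead of four, at the cost of a small rounding error. Note how the smallest weight collapses to zero, which is the kind of quality loss that becomes more pronounced at lower bit widths.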

Finally, the NVIDIA Encoder (NVENC) and Decoder (NVDEC) capabilities have been upgraded (ninth-generation and sixth-generation respectively), with one additional hardware encoder and one additional hardware decoder compared to the NVIDIA GeForce RTX 4090. This is great news for creators and anyone working with video, allowing for more simultaneous workloads with minimal performance loss.

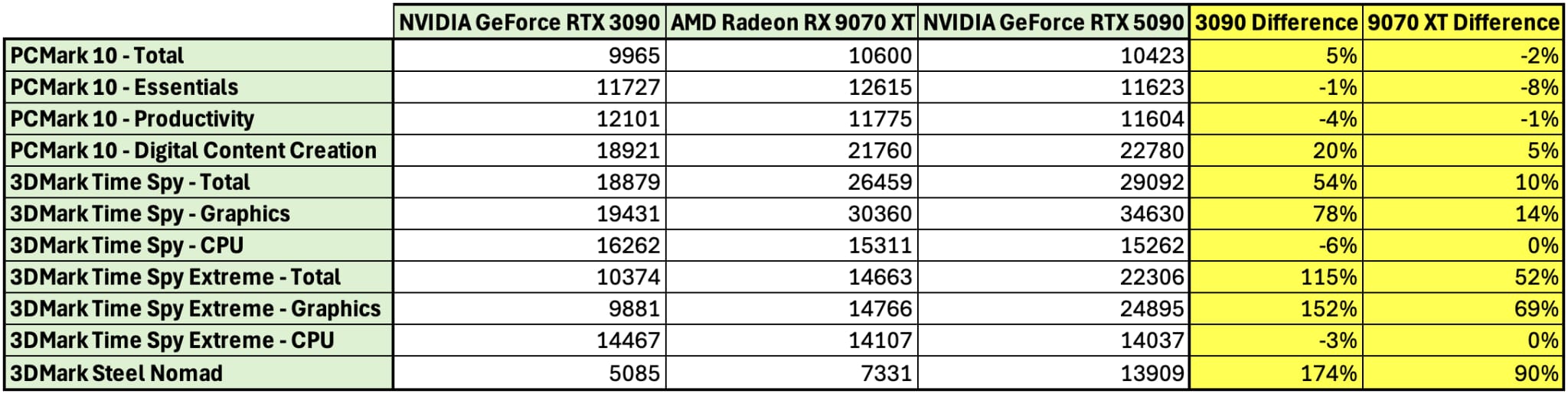

Outlined below are some high-level test results compared to my NVIDIA GeForce RTX 3090 and AMD Radeon RX 9070 XT.

NOTE: My CPU performance has degraded over time. Therefore, the CPU-centric tests are showing a minor reduction in performance.

Overall, when considering graphics-centric tests, the NVIDIA GeForce RTX 5090 outperforms my NVIDIA GeForce RTX 3090 by approximately 99% and the AMD Radeon RX 9070 XT by 40%.

The near 100% increase over my NVIDIA GeForce RTX 3090 is certainly welcome, highlighting the benefit of skipping a generation. These tests are not comprehensive and the performance benefit will vary depending on the workload.

With that said, these are impressive results, demonstrating the raw horsepower of the NVIDIA GeForce RTX 5090, which is further enhanced when you enable software features, such as DLSS 4 and MFG.

In conclusion, although extremely expensive and power-hungry, I am impressed with the NVIDIA GeForce RTX 5090. I certainly would not recommend this graphics card to everyone, but if you are seeking the “best” available, the NVIDIA GeForce RTX 5090 is the current undisputed king.

I am excited to broaden my testing, specifically with complex workloads related to AI.