GitHub Copilot

Yesterday, GitHub announced a new capability known as GitHub Copilot, currently available as a Technical Preview.

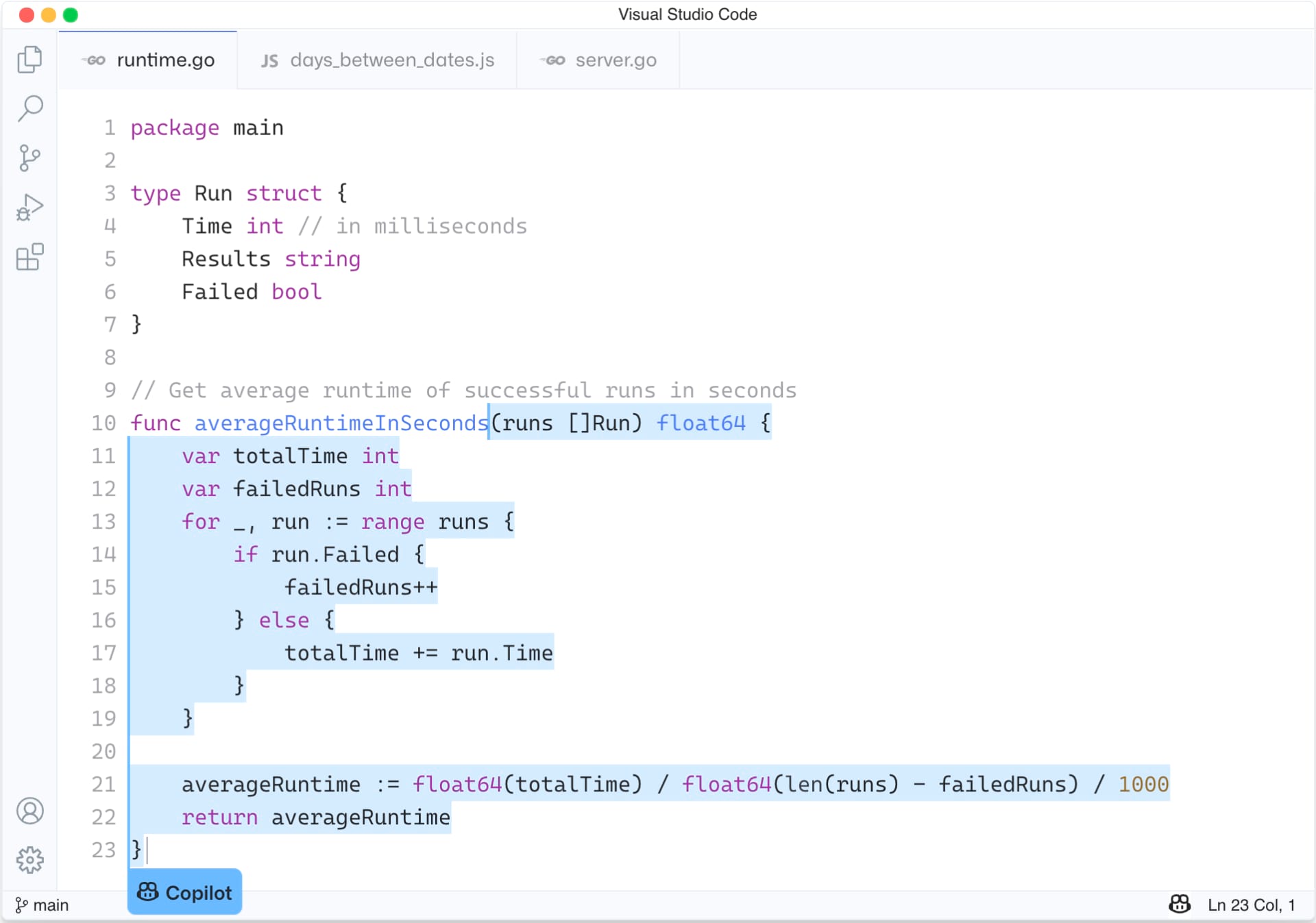

GitHub Copilot is pitched as an “AI Pair Programmer”, which goes beyond traditional code completion by suggesting full lines of code and even entire functions.

It is powered by Codex, a new AI system created by OpenAI. It uses docstrings, comments, function names, and the surrounding code as context to suggest relevant code.

For example, it is possible to write a comment describing the desired logic, at which point GitHub Copilot will suggest the code itself.
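As a hypothetical sketch of that workflow in Python (this is not actual Copilot output; the comment, function name and body are made up for illustration), the developer types only the comment and the signature, and the body below is the kind of suggestion a tool like GitHub Copilot might offer:

```python
# Hypothetical illustration: the developer writes the comment and signature;
# an assistant such as Copilot might then propose a body like the one below.

# Return True if the given string is a palindrome, ignoring case and
# non-alphanumeric characters.
def is_palindrome(text: str) -> bool:
    # Keep only letters and digits, converted to lower case.
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    # A palindrome reads the same forwards and backwards.
    return cleaned == cleaned[::-1]


print(is_palindrome("A man, a plan, a canal: Panama"))  # True
print(is_palindrome("GitHub"))  # False
```

The developer still has to review a suggestion like this before accepting it.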

GitHub has confirmed that the AI model was trained on publicly available code hosted on GitHub.

This is an incredible dataset for training, as GitHub hosts a massive range of examples covering almost any scenario, along with relevant metadata such as how popular a given piece of code is.

Therefore, assuming GitHub Copilot works as described, the ramifications of this capability could be profound.

GitHub Copilot should improve developer speed and code quality, as well as reduce the risk of errors and bugs. It could also act as a powerful enabler for new developers, providing real-time support when starting a new project or learning a new language/framework.

An alternative perspective is that GitHub Copilot could erode the competency of the developer community, making individuals more reliant on tooling to produce code (much as spell checkers and grammar checkers can for writing).

As an optimist, I prefer to consider the positive ramifications and hope that the benefits of GitHub Copilot will allow the developer community to prioritise more complex problems, instead of sweating commodity code.

However, I suspect the most controversial aspect of this release will be its impact on copyright law.

For example, it is safe to assume that developers regularly search and copy code from public repositories on GitHub (as well as other sources). In theory, they should be checking and adhering to any associated licenses, but I suspect this is not common practice.

There are frequent examples of open-source code finding its way into proprietary projects without any documented acknowledgement of the source.

However, GitHub Copilot industrialises the process of copying code, making it easier and therefore more prevalent. For example, if GitHub Copilot suggests code from an open-source project, is the developer free to simply accept the suggestion? Is this any different to manually searching and copying?

This is likely less of an issue for individual developers working on royalty-free and/or personal projects, but more complicated for businesses that develop custom software.

I am a big believer in the open-source community, collaborating and learning from each other to solve increasingly complex problems. However, I also believe the community must be protected to ensure it remains healthy, especially the individuals who have invested countless hours to progress a specific domain.

I hope GitHub have considered these points and include relevant controls to ensure that developers and any associated licenses are respected.

Finally, I commend GitHub for continuing to innovate. I see this as the first step towards true AI-assisted development; however, I predict a strong and polarising response from the community!

UPDATE (04-JUL-2021)

The team over at DevOps Directive posted an interesting video of GitHub Copilot completing a range of LeetCode interview questions.

As highlighted by the video, the results were impressive, with GitHub Copilot successfully answering 80% of the questions.

That said, I suspect GitHub Copilot was at least partly trained on solutions to classic interview questions, which are typically well defined and structured. Therefore, I expect the success rate would drop significantly in scenarios with greater nuance or uncommon constraints.

Either way, the technology looks promising, assuming GitHub can navigate the privacy, legal and copyright challenges, whilst respecting the developer contributions that help train the model.