Computer Vision - Update

Over the past few months, I have been testing Computer Vision technologies using an Nvidia Jetson Nano Developer Kit. I covered the framing, use cases, architecture and setup in my previous two articles.

This article focuses on using the Jetson Nano Developer Kit to execute some basic Computer Vision workloads, covering image recognition, object detection and semantic segmentation.

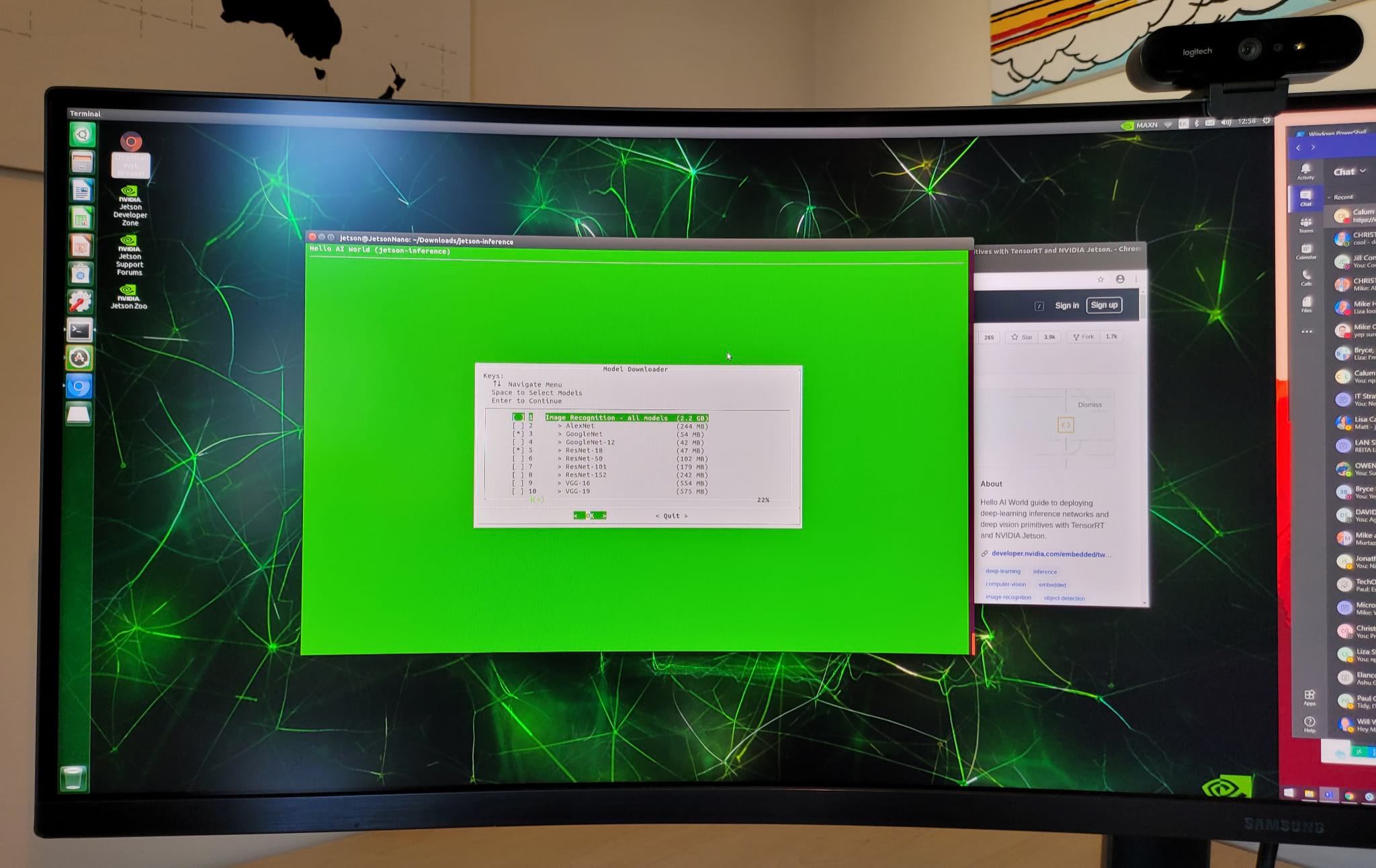

The photo below shows my Jetson Nano Developer Kit, connected to my monitor running picture-in-picture via HDMI.

To get started, I used the Inference and Realtime DNN Vision library from Dustin Franklin, which includes a range of example projects, as well as links to the Nvidia Jetson AI Courses and Certifications.

The video below covers the software configuration process, including the setup of Docker and installation of the pre-trained models.

The library comes pre-loaded with forty models, which can be managed and installed via the ‘docker/run.sh’ command.

All models are installed locally on the host and automatically mounted to the running Docker container, which makes it much easier to view the output and make future modifications.

Once the initial configuration is complete, the example projects can be loaded. They cover three Computer Vision primitives: imageNet for image recognition, detectNet for object detection, and segNet for semantic segmentation. All three inherit from the shared tensorNet object.
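To illustrate the idea of three primitives sharing one base, here is a minimal Python sketch of the inheritance pattern. The class names ending in `Sketch`, the `describe` method and the `model-a`/`model-b`/`model-c` names are all hypothetical stand-ins of my own; they are not the library's classes and do no inference.

```python
class TensorNetSketch:
    """Hypothetical stand-in for a shared base that every network builds on."""

    def __init__(self, model_name: str):
        self.model_name = model_name

    def describe(self) -> str:
        # Behaviour defined once here is available to every subclass.
        return f"{type(self).__name__} using model '{self.model_name}'"


class ImageNetSketch(TensorNetSketch):
    task = "image recognition"


class DetectNetSketch(TensorNetSketch):
    task = "object detection"


class SegNetSketch(TensorNetSketch):
    task = "semantic segmentation"


# Placeholder model names, used only to show the shared method at work.
nets = [ImageNetSketch("model-a"), DetectNetSketch("model-b"), SegNetSketch("model-c")]
for net in nets:
    print(net.task, "->", net.describe())
```

The point of the pattern is that anything common to all networks (here, just the model name) lives in one place, while each subclass only adds what is specific to its task.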

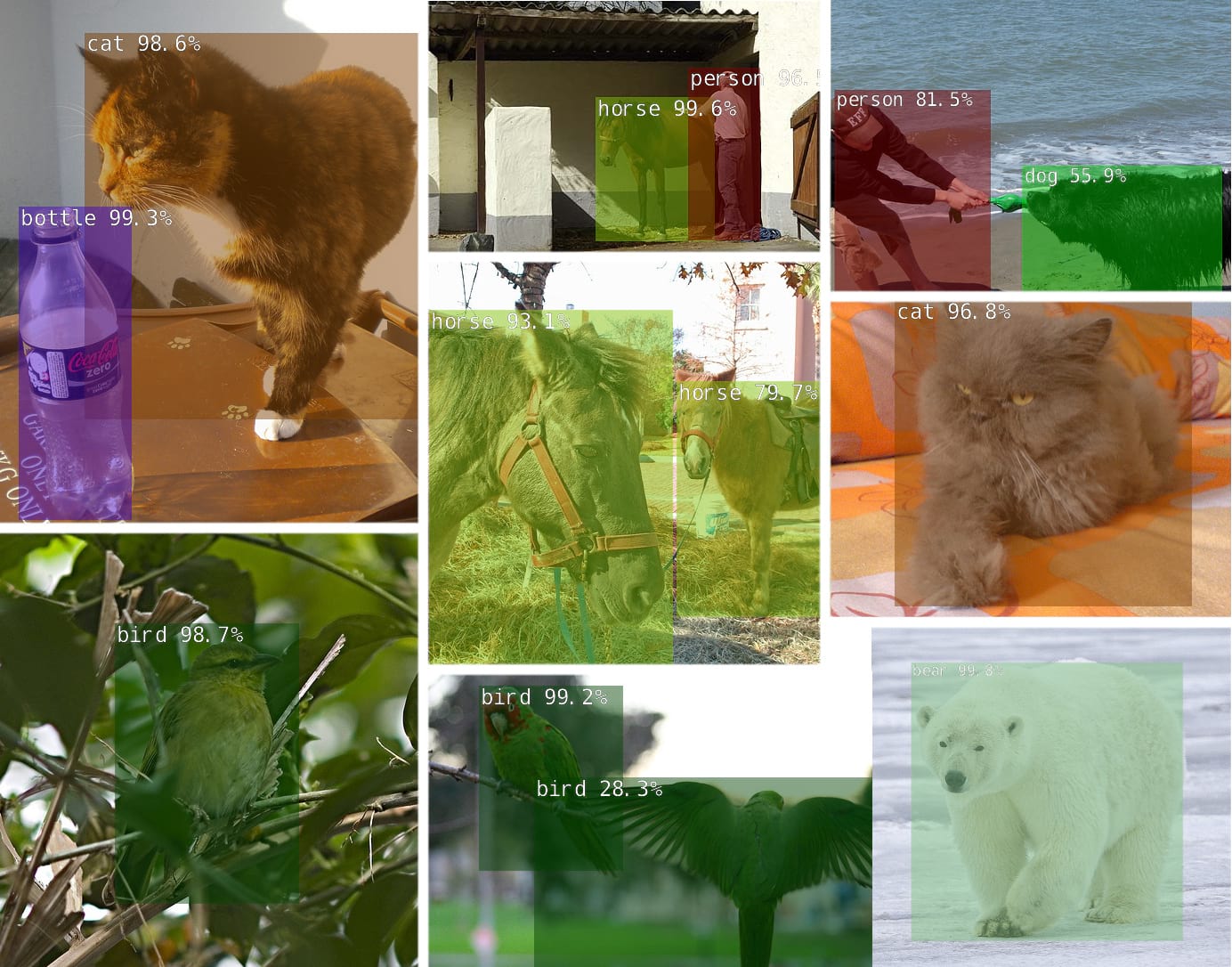

Image Recognition

The first project focuses on image recognition, which uses classification networks that have been trained on large datasets to identify scenes and objects.

The imageNet object accepts an input image and outputs the classified object name, confidence of the classified object, and the framerate.
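For readers who want to see roughly what this looks like in Python, the sketch below follows the pattern I understand the library's own examples to use. Treat it as an assumption-laden outline rather than the definitive API: the module names `jetson_inference` and `jetson_utils` apply to recent releases (older ones use `jetson.inference` and `jetson.utils`), `googlenet` is assumed to be an installed model, and `format_status` and `classify_camera` are names I made up.

```python
def format_status(class_desc: str, confidence: float, fps: float) -> str:
    """Build a one-line summary: class name, confidence and framerate."""
    return f"{class_desc} ({confidence * 100:.1f}%) | {fps:.0f} FPS"


def classify_camera(uri: str = "csi://0", network: str = "googlenet") -> None:
    """Classify camera frames until the display window is closed."""
    # Imported here so format_status can be tried without a Jetson.
    # Assumption: these module names match the installed release.
    from jetson_inference import imageNet
    from jetson_utils import videoSource, videoOutput

    net = imageNet(network)
    camera = videoSource(uri)
    display = videoOutput("display://0")

    while display.IsStreaming():
        img = camera.Capture()
        if img is None:  # capture timed out, try again
            continue
        class_id, confidence = net.Classify(img)
        status = format_status(net.GetClassDesc(class_id), confidence,
                               net.GetNetworkFPS())
        display.Render(img)
        display.SetStatus(status)


# The helper alone, using the baseball result reported in this article.
print(format_status("baseball", 0.99, 68))
```

Calling `classify_camera()` inside the running container should open a window with the status line in its title bar; only the final `print` runs without the hardware.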

Assuming the Docker container is running, the image recognition project can be started using the following command.

./imagenet.py csi://0

The “csi://0” argument assumes a MIPI-CSI camera is installed, for example, the Raspberry Pi Camera.
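The `csi://0` string follows a `protocol://resource` pattern. As a rough illustration of how such a string breaks down, the sketch below splits it using Python's standard `urllib.parse`. The `describe_source` helper is hypothetical, and `v4l2:///dev/video0` (a USB camera) is included on the assumption that your version of the library accepts it. This is not how the library itself parses its arguments.

```python
from urllib.parse import urlparse


def describe_source(uri: str) -> dict:
    """Split a camera or stream URI into its protocol and resource parts."""
    parsed = urlparse(uri)
    # For "csi://0" the resource is the network-location part ("0").
    # For "v4l2:///dev/video0" it is the path ("/dev/video0").
    resource = parsed.netloc or parsed.path
    return {"protocol": parsed.scheme, "resource": resource}


print(describe_source("csi://0"))
print(describe_source("v4l2:///dev/video0"))
```

In other words, the part before `://` selects the kind of input, and the part after it selects which device to open.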

The photos below highlight the image recognition project in action.

I tested a wide range of objects, including many of my son’s toys. The model was surprisingly good at identifying dinosaurs, with varying levels of confidence.

The second photo shows a much higher confidence (99%) when viewing a baseball.

The average framerate for image recognition on the Jetson Nano Developer Kit was 68fps.

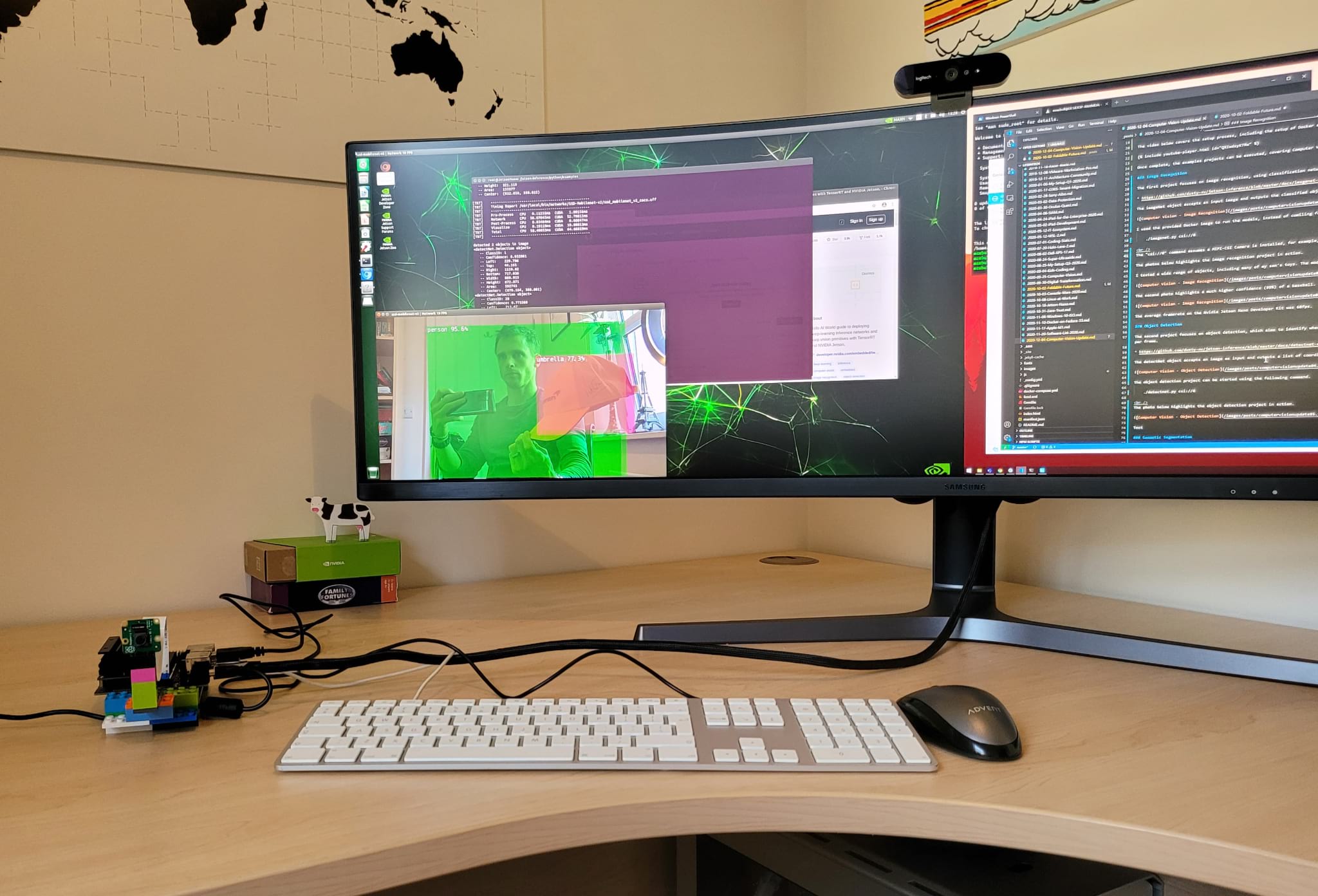

Object Detection

The second project focuses on object detection, which aims to identify where in the frame various objects are located by extracting their bounding boxes.

Unlike image recognition, object detection networks are capable of detecting multiple different objects per frame.

The detectNet object accepts an image as input and outputs a list of coordinates of the detected bounding boxes, alongside the classified object name, the confidence of the classified object, and the framerate.
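To make that output more concrete, here is a small sketch of what a list of detections could look like and how low-confidence results might be filtered out. The `Detection` class and `keep_confident` helper are hypothetical stand-ins, not the library's own types, and the sample values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical record for one detected object (not the library's type)."""
    label: str
    confidence: float  # 0.0 to 1.0
    left: float
    top: float
    right: float
    bottom: float

    @property
    def width(self) -> float:
        return self.right - self.left

    @property
    def height(self) -> float:
        return self.bottom - self.top

    @property
    def center(self) -> tuple:
        return ((self.left + self.right) / 2, (self.top + self.bottom) / 2)


def keep_confident(detections, threshold=0.5):
    """Drop detections whose confidence falls below the threshold."""
    return [d for d in detections if d.confidence >= threshold]


# Made-up values for illustration only, not measurements from my tests.
frame = [
    Detection("person", 0.91, 100, 50, 300, 450),
    Detection("chair", 0.35, 400, 200, 520, 430),
]
for d in keep_confident(frame):
    print(d.label, d.width, d.height, d.center)
```

Each frame yields a list like this, which is why a single image can contain several labelled boxes at once.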

The object detection project can be started using the following command.

./detectnet.py csi://0

The photo below highlights the object detection project in action.

The object detection project pushed the Jetson Nano Developer Kit much harder, with the framerate dropping to 20fps.

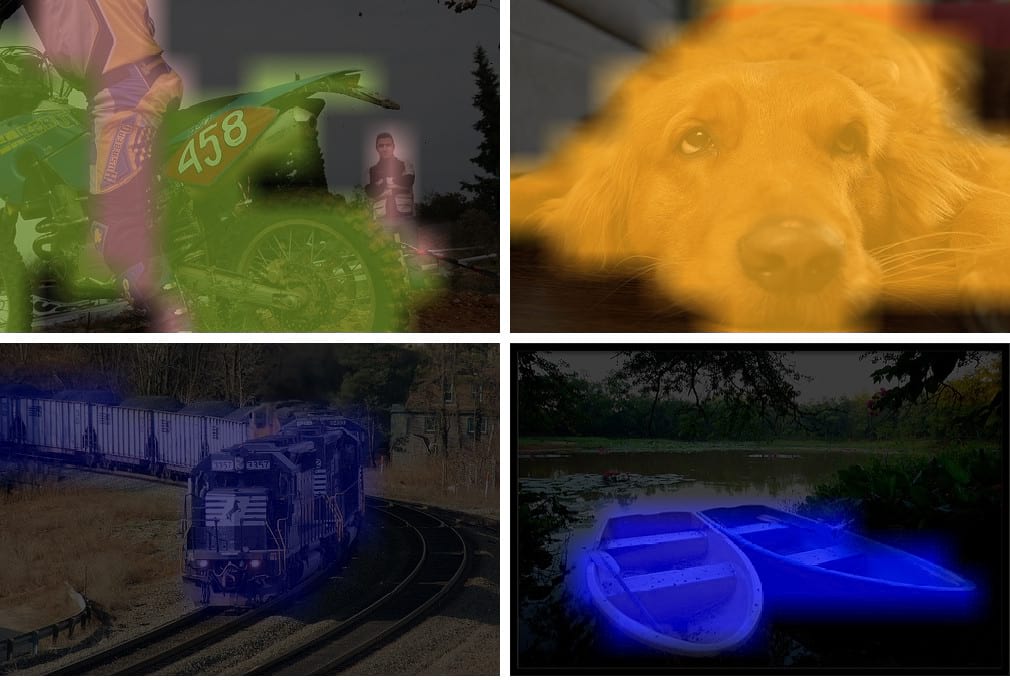

Semantic Segmentation

The final project focuses on semantic segmentation, where classification occurs at the pixel level rather than for the entire image. This technique is useful for environmental perception and for images with multiple objects per scene.

The segNet object accepts an image as an input and outputs a second image with the per-pixel classification mask overlay.
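The idea of a per-pixel mask overlay can be sketched in plain Python. This is a toy illustration under my own assumptions, not the library's implementation: each pixel gets the class with the highest score, and the class colour is then blended with the original pixel. The function names, the two-class palette and the tiny 1x2 "image" are all hypothetical.

```python
def classify_pixels(scores):
    """scores[y][x] holds one score per class; return the best class per pixel."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row]
            for row in scores]


def overlay(image, mask, palette, alpha=0.5):
    """Blend each RGB pixel with the colour assigned to its class."""
    out = []
    for img_row, mask_row in zip(image, mask):
        out_row = []
        for rgb, cls in zip(img_row, mask_row):
            colour = palette[cls]
            out_row.append(tuple(
                round((1 - alpha) * c + alpha * m) for c, m in zip(rgb, colour)
            ))
        out.append(out_row)
    return out


# Toy 1x2 image with two classes: 0 = background, 1 = person (made-up data).
scores = [[[0.9, 0.1], [0.2, 0.8]]]
image = [[(100, 100, 100), (200, 200, 200)]]
palette = {0: (0, 0, 0), 1: (0, 200, 0)}

mask = classify_pixels(scores)
print(mask)
print(overlay(image, mask, palette))
```

A real network does the same thing for every pixel in the frame, which helps explain why noisy backgrounds lead to patchy masks.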

The semantic segmentation project can be started using the following command.

./segnet.py --network=fcn-resnet18-mhp csi://0

The photo below highlights the semantic segmentation project in action.

I struggled to achieve clear results with this project, likely due to poor lighting and/or a lot of background noise. The Jetson Nano Developer Kit achieved an average of 28fps.
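One way to compare the three results is to convert each framerate into the time spent on a single frame, which is simply 1000 divided by the frames per second. The short sketch below does that for the figures reported in this article; the `frame_time_ms` helper is my own naming.

```python
def frame_time_ms(fps: float) -> float:
    """Convert frames per second into milliseconds per frame."""
    return 1000.0 / fps


# Framerates reported earlier in this article.
reported_fps = {
    "image recognition": 68,
    "object detection": 20,
    "semantic segmentation": 28,
}

for task, fps in reported_fps.items():
    print(f"{task}: {fps}fps = {frame_time_ms(fps):.1f} ms per frame")
```

That works out to roughly 14.7 ms per frame for image recognition, 50.0 ms for object detection and 35.7 ms for semantic segmentation.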

Conclusion

The three example projects provide a solid foundation covering the Computer Vision primitives of image recognition, object detection and semantic segmentation.

The Jetson Nano worked well, delivering acceptable performance considering the cost and passive cooling. It did, however, become very hot when running the object detection project, potentially highlighting the need for a fan.

Overall, I remain very impressed with the Jetson Nano Developer Kit and believe it is an excellent entry point for anyone interested in exploring Artificial Intelligence (AI).