Service Delivery

This article is part of a series. I would recommend reading the articles in order, starting with “Modern IT Ecosystem”, which provides the required framing.

As a brief reminder, this series aims to explore the “art of the possible” if an enterprise business could hypothetically rebuild IT from the ground up, creating a modern IT ecosystem.

As a technologist, I am eager to start proposing the architecture and associated technologies. However, to ensure the series is credible, I felt it was important to first outline my approach to service delivery.

Introduction

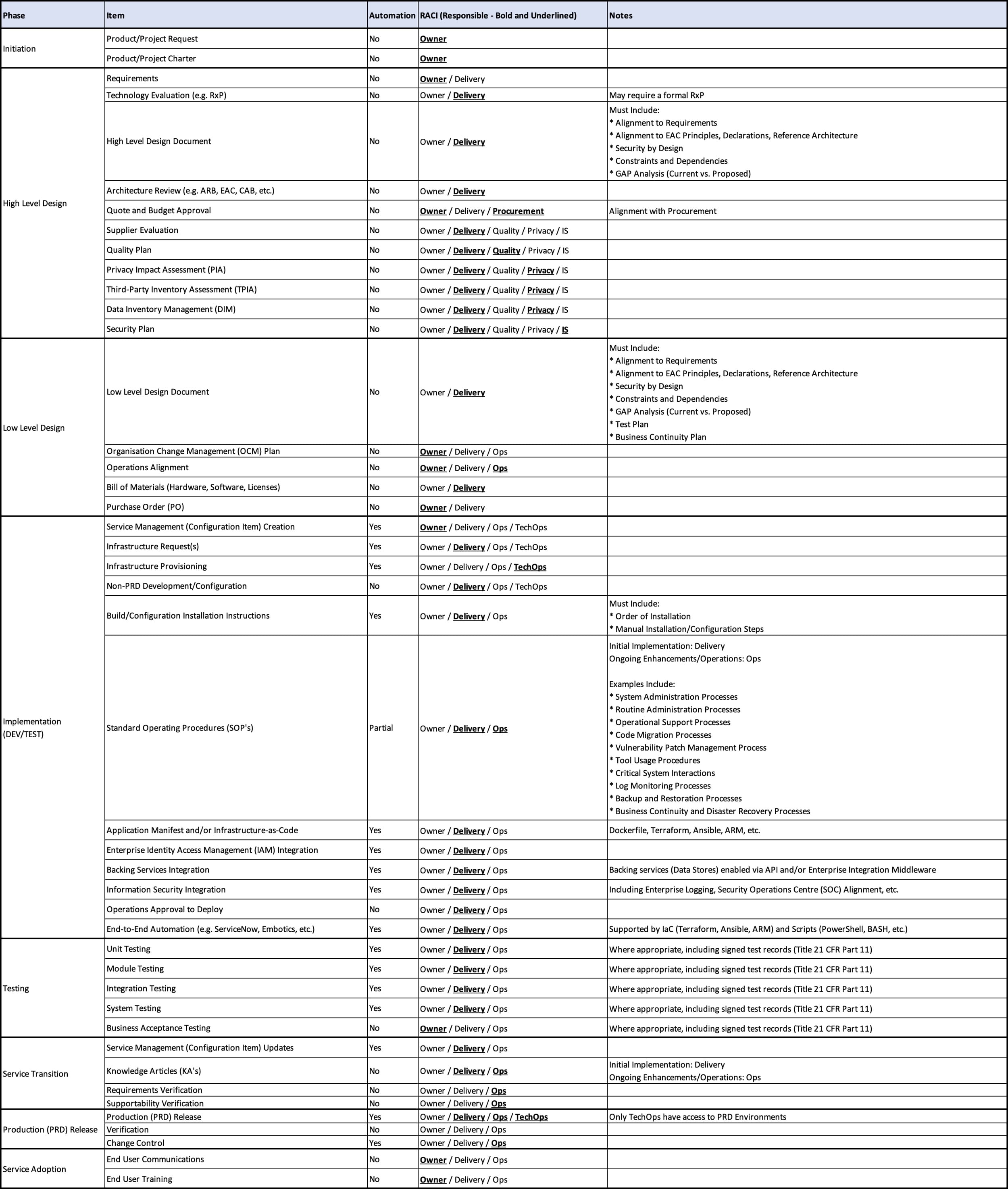

To help ensure quality and consistency, I have defined a service delivery plan, which highlights the key deliverables associated with the implementation of any new service. Depending on the service, the deliverables could be rightsized, helping to promote innovation and agility. The exception would be services that support regulated workloads, where the ability to demonstrate a state of control would be a critical requirement.

For example, as part of this series, I defined a set of business characteristics, where I selected a regulated industry (Food and Drug). Therefore, any service supporting a “GxP” workload would need to adhere to the principles and procedures outlined by Good Automated Manufacturing Practice (GAMP).

Service Delivery Plan

Outlined below is the service delivery plan, which includes the phase, item and decision making authorities (RACI).

Although “Delivery”, “Ops” and “IS” are separate RACI roles, the service delivery plan assumes a “DevSecOps” philosophy. Therefore, security practices should be integrated within the DevOps process, meaning that “Delivery”, “Ops” and “IS” would all be actively engaged as part of the design.

The “Automation” column highlights which items would be viable for automation or facilitate application/data automation. For example, “Application Manifest and/or Infrastructure-as-Code” would be an enabler of future automation.

Where required, the Design Qualification (DQ), Installation Qualification (IQ), Operational Qualification (OQ) and Performance Qualification (PQ) would be embedded as part of the associated phases.

Finally, the service delivery plan is not tied to any specific project management methodology (e.g. Waterfall or Agile). This allows for flexibility, depending on the characteristics and/or requirements of the specific service.

Continuous Quality

When operating within a regulated industry, all roles must have an understanding of the associated regulations and guidelines. For example, in the context of “Food and Drug”, it would be important to have a clearly defined process that facilitates Qualification and Validation.

- Qualification: The act of proving that equipment or ancillary systems are properly installed, work as designed and comply with the specified requirements.

- Validation: Documented objective evidence that provides a high degree of assurance that a specific process consistently produces a product meeting its predetermined specifications.

Qualification is part of validation, but the individual qualification steps alone do not constitute process validation. For example, the infrastructure must be qualified, while the software running the processes on the infrastructure must be validated.

Many perceive achieving this outcome as a time-consuming process. However, I believe modern technologies and techniques could help streamline the process, whilst simultaneously improving quality.

Continuous Qualification

Software-defined techniques and the use of Infrastructure-as-Code have a profound impact on the provisioning and maintenance of infrastructure. The four steps outlined below are an example of how these techniques could help to enable and maintain a Qualified State (QS).

- As outlined in the service delivery plan, infrastructure requirements must be substantiated as code (Infrastructure-as-Code). This code becomes a blueprint, accurately reflecting the state of the infrastructure.

- A “continuous” qualification framework (following standard CI/CD practices) consistently deploys the infrastructure based on a specific blueprint in NON-PRD (DEV, TEST, QA) environments. Automated testing could then be performed against each deployment, resulting in test execution reports.

- IT Quality reviews and certifies the blueprints and test execution reports.

- The qualified blueprints could be published as a Service Catalogue item, enabling enterprise re-use.
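To make the four steps more concrete, the following is a minimal sketch in Python, not a definitive implementation. Every name in it is a hypothetical stand-in: `deploy` simulates an Infrastructure-as-Code deployment, `run_tests` produces a test execution report, and `Catalogue.publish` represents the certification and publishing steps. The blueprint keys (`cpu`, `memory_gb`, `encrypted`) are invented for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(blueprint: dict) -> str:
    """Return a stable SHA-256 digest of a blueprint (canonical JSON)."""
    canonical = json.dumps(blueprint, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def deploy(blueprint: dict, environment: str) -> dict:
    """Hypothetical stand-in for an IaC deployment: returns the resulting state."""
    return {"environment": environment, **blueprint}


def run_tests(state: dict, blueprint: dict) -> dict:
    """Compare the deployed state with the blueprint and return a report."""
    results = {key: state.get(key) == value for key, value in blueprint.items()}
    return {
        "blueprint": fingerprint(blueprint),
        "environment": state["environment"],
        "results": results,
        "passed": all(results.values()),
    }


@dataclass
class Catalogue:
    """Holds blueprints that have been reviewed and certified."""
    items: dict = field(default_factory=dict)

    def publish(self, name: str, blueprint: dict, reports: list, certified_by: str) -> None:
        # Refuse to publish unless every report passed for this exact blueprint.
        digest = fingerprint(blueprint)
        qualified = bool(reports) and all(
            r["passed"] and r["blueprint"] == digest for r in reports
        )
        if not qualified:
            raise ValueError("blueprint is not qualified")
        self.items[name] = {
            "blueprint": blueprint,
            "digest": digest,
            "certified_by": certified_by,
        }


# Step 1: requirements expressed as code.
blueprint = {"cpu": 4, "memory_gb": 16, "encrypted": True}
# Step 2: deploy and test in each NON-PRD environment.
reports = [run_tests(deploy(blueprint, env), blueprint) for env in ("DEV", "TEST", "QA")]
# Steps 3 and 4: certification and publication to the catalogue.
catalogue = Catalogue()
catalogue.publish("standard-vm", blueprint, reports, certified_by="it-quality")
```

Tying each report to the blueprint's digest means a certificate applies only to the exact version that was tested. Any change to the blueprint produces a new digest and would need to pass through the same steps again.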

To ensure end-to-end quality, all associated Automation and Monitoring tools must also be qualified, empowering them to proactively deploy and maintain the Qualified State (QS).
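As an illustration of what maintaining the Qualified State could involve, the sketch below compares a qualified blueprint with the state reported by a monitoring tool and restores any setting that has drifted. It is a simplified assumption of how such a check might work. The function names and the example settings are hypothetical.

```python
def detect_drift(blueprint: dict, observed: dict) -> dict:
    """Return {setting: (expected, actual)} for every setting that differs."""
    return {
        key: (expected, observed.get(key))
        for key, expected in blueprint.items()
        if observed.get(key) != expected
    }


def remediate(blueprint: dict, observed: dict) -> dict:
    """Return the observed state with every blueprint setting restored.

    Settings that the blueprint does not govern are left untouched.
    """
    return {**observed, **blueprint}


# Hypothetical qualified blueprint and a state reported by monitoring.
blueprint = {"cpu": 4, "memory_gb": 16, "encrypted": True}
observed = {"cpu": 4, "memory_gb": 16, "encrypted": False, "hostname": "vm-01"}

drift = detect_drift(blueprint, observed)
print(drift)  # {'encrypted': (True, False)}

restored = remediate(blueprint, observed)
```

In practice, the drift report itself would be worth retaining, since it documents when the infrastructure left the Qualified State and how it was returned to it.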

Continuous Validation

Similar modern software development techniques could be applied to validation. The three steps outlined below are an example of how continuous validation could be achieved.

- Automated testing and test-driven development (TDD) must be a core part of the software development lifecycle.

- Functional tests become the backbone of the validation process, with product teams writing the validation scripts alongside the code and executing the tests at regular intervals.

- The regular execution of automated tests (before and after implementation) would result in an application that is continuously validated.
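The steps above can be sketched as follows, assuming each functional test is linked to the requirement it verifies and every run produces a stored evidence record. The requirement identifiers (`URS-001`, `URS-002`), the `validates` decorator and the `dose_in_mg` function are hypothetical examples, not part of any real framework or system.

```python
import hashlib
import json
from datetime import datetime, timezone

REGISTRY = []  # (requirement_id, test_function) pairs


def validates(requirement_id):
    """Decorator linking a functional test to the requirement it verifies."""
    def register(func):
        REGISTRY.append((requirement_id, func))
        return func
    return register


def dose_in_mg(weight_kg, mg_per_kg):
    """Hypothetical application code under validation."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return round(weight_kg * mg_per_kg, 2)


@validates("URS-001")
def test_dose_is_weight_times_rate():
    assert dose_in_mg(70, 1.5) == 105.0


@validates("URS-002")
def test_rejects_non_positive_weight():
    try:
        dose_in_mg(0, 1.5)
    except ValueError:
        return
    raise AssertionError("expected ValueError")


def run_validation():
    """Execute every registered test and return an evidence record."""
    results = []
    for requirement_id, test in REGISTRY:
        try:
            test()
            outcome = "pass"
        except AssertionError as exc:
            outcome = f"fail: {exc}"
        results.append(
            {"requirement": requirement_id, "test": test.__name__, "outcome": outcome}
        )
    record = {"executed_at": datetime.now(timezone.utc).isoformat(), "results": results}
    # A digest of the record helps a reviewer detect later changes to it.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["sha256"] = hashlib.sha256(payload).hexdigest()
    return record


evidence = run_validation()
print(json.dumps(evidence, indent=2))
```

Running `run_validation` on every build, and again after each release, would yield a time-stamped trail that maps requirements to test outcomes. A real regulated environment would likely also need controlled storage and electronic signatures for these records, which this sketch does not attempt.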

A common misconception is that regulators require tests to be executed by a human and physically signed. Using the FDA as an example, the stated expectation is “objective evidence that software requirements describe the intended use and that the system meets those needs of the user.” Objective evidence could be achieved through a variety of techniques, including automation.

Conclusion

Regardless of the defined compliance and/or regulatory requirements, I believe every business should aim to proactively embed quality (Quality by Design), making it a continuous part of the service delivery approach. Where viable, software-defined techniques and automation could significantly improve quality, helping to drive higher accuracy and consistency, whilst also unlocking business agility.