GeForce RTX

On 20th August 2018, NVIDIA revealed their next-generation consumer graphics hardware, known as the GeForce RTX 20 series.

The series launched with three consumer graphics cards, the GeForce RTX 2070, GeForce RTX 2080, and the flagship GeForce RTX 2080 Ti. These cards started to hit the market in September, but are likely to remain in short supply throughout 2018.

The GeForce RTX 20 series introduced the Turing architecture, fabricated by TSMC on their 12nm FFN (FinFET NVIDIA) process. The series will eventually supersede the current Pascal architecture found in the popular GeForce GTX 10 series. Therefore, if you are interested in a GeForce GTX 10 series graphics card (e.g. GeForce GTX 1080), now would be a good time to purchase.

NVIDIA state that the Turing architecture represents the biggest technical leap in over a decade, enabling major advances in efficiency and performance for PC gaming, professional graphics applications, and deep learning inferencing.

The Turing architecture has similar clock speeds to the previous generation but includes an increase in the CUDA core count (an average of 15% across the series), as well as a redesigned Streaming Multiprocessor (SM) architecture. These changes should enable greater shading efficiency, resulting in up to a 50% performance improvement per CUDA core.

However, the real headline of the Turing architecture is the inclusion of RT and Tensor Cores.

- RT Cores are accelerator units that are dedicated to performing ray tracing operations. They can be leveraged by systems and interfaces such as NVIDIA’s RTX ray tracing technology, and APIs such as Microsoft DXR, NVIDIA OptiX, and Vulkan ray tracing.

- Tensor Cores power a suite of new deep learning-based neural services for games and professional graphics, in addition to providing fast AI inferencing for cloud-based systems. An example of this technology is the new Deep Learning Super Sampling (DLSS) capability, which aims to improve gaming image quality, whilst reducing the performance impact when compared against existing techniques.

NVIDIA believes the inclusion of RT and Tensor Cores will enable hybrid rendering, where ray tracing is combined with traditional rasterisation to exploit the strengths of both technologies.

The rest of this article will provide more detail regarding the potential impact of the RT and Tensor Cores, as well as my initial thoughts of the PNY GeForce RTX 2080 Ti 11GB XLR8 Gaming Overclocked Edition graphics card.

If you are interested in the technical details of the Turing architecture, I would recommend reviewing the following two sources:

RT Cores

Real-time computer graphics have long used a technique known as rasterisation, where objects on the screen are created from a mesh of virtual triangles, or polygons, that create 3D models of objects.

Over the years, developers have become very efficient at rasterisation, achieving some incredible results, as demonstrated in modern Triple-A games such as Red Dead Redemption 2, The Witcher 3 and Far Cry 5.

Unfortunately, rasterisation does have limitations. For example, due to the way rasterisation draws a scene, it cannot correctly model the behaviour of a mirror or glass. This is because it only draws objects; it does not track the light itself.

In 1968, Arthur Appel (IBM) published the paper “Some Techniques for Shading Machine Renderings of Solids”, which described a technique to use ray tracing to synthesise images in computer graphics.

Ray tracing is a rendering technique that can produce incredibly realistic lighting effects. In short, an algorithm can trace the path of light and then simulate the way that the light interacts with virtual objects in a computer-generated world.
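To make the idea a little more concrete, here is a minimal Python sketch of the core step: firing a single ray into a scene, finding where it hits a sphere, and working out how brightly that point is lit. This is purely illustrative and is not how RT Cores or any NVIDIA API are implemented; the function names (`intersect_sphere`, `shade`) and the simple diffuse lighting model are my own assumptions for the example.

```python
import math

def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))

def intersect_sphere(origin, direction, centre, radius):
    """Distance along the ray to the nearest hit, or None on a miss.

    Assumes `direction` is unit length. Simplification: a ray that
    starts inside the sphere is treated as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, centre))
    # Coefficients of the quadratic t^2 + b*t + c = 0
    b = 2.0 * dot(direction, oc)
    c = dot(oc, oc) - radius * radius
    discriminant = b * b - 4.0 * c
    if discriminant < 0:
        return None  # the ray never touches the sphere
    t = (-b - math.sqrt(discriminant)) / 2.0
    return t if t > 0 else None

def shade(origin, direction, centre, radius, light_dir):
    """Diffuse brightness (0.0 to 1.0) seen along one ray.

    `light_dir` is a unit vector pointing from the surface towards
    the light (an assumption of this sketch).
    """
    t = intersect_sphere(origin, direction, centre, radius)
    if t is None:
        return 0.0  # background
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    normal = tuple((h - c) / radius for h, c in zip(hit, centre))
    return max(0.0, dot(normal, light_dir))

# One ray fired straight ahead at a sphere five units away.
brightness = shade((0, 0, 0), (0, 0, -1), (0, 0, -5), 1.0, (0, 0, 1))
```

A real renderer repeats this for every pixel (often many times per pixel) and follows reflected and refracted rays as well, which is where the computational cost comes from.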

This level of accuracy is considered by many as the “holy grail” of computer-generated images, producing results that are nearly indistinguishable from the real world.

Unfortunately, ray tracing is computationally expensive and (up until now) has been considered unfeasible for consumer hardware. The Turing architecture aims to change this, which (if successful) would have a profound impact on game development.

The video below is an example of ray tracing being used for reflections, powered by the Unreal Engine 4.

NVIDIA has a number of additional ray tracing video demonstrations available on their website.

Tensor Cores

As you might be aware, Tensor Cores are a key technology in a number of NVIDIA initiatives, most notably the Volta architecture, which targets Artificial Intelligence (AI) workloads.

The Turing architecture carries over the Tensor Cores from Volta. They help to speed up ray tracing through AI denoising (an area where Tensor Cores excel), which reduces the number of rays required in a scene.

Tensor Cores are also used to enable other capabilities, for example, Deep Learning Super Sampling (DLSS).

DLSS is a proprietary technology that uses deep learning to deliver better image quality at a lower cost than traditional Anti-Aliasing (AA) techniques, such as Temporal Anti-Aliasing (TAA).

The video below provides a good example of DLSS vs. TAA.

Arguably, TAA still produces a sharper image. However, the performance differences are certainly compelling (up to 33%).

PNY GeForce RTX 2080 Ti

Similar to the GeForce GTX 10 series launch, NVIDIA has released “Founders Edition” versions of the GeForce RTX 20 series. Unlike the GeForce GTX 10 series, each card includes a modest factory overclock. For example, the GeForce RTX 2080 Ti includes a boost clock of 1635MHz (up from the reference 1545MHz).

I have been testing the PNY GeForce RTX 2080 Ti 11GB XLR8 Gaming Overclocked Edition graphics card, which includes the same factory overclock as the Founders Edition. The full specification can be found below:

- PNY Part Number: VCG2080T11TFMPB-O

- Card Dimensions: 1.50” × 11.88” × 4.50”

- CUDA Cores: 4352

- Clock Speed: 1350MHz

- Boost Speed: 1635MHz

- Memory Speed: 14Gbps

- Memory Size: 11GB GDDR6

- Memory Interface: 352-bit

- Memory Bandwidth: 616GB/sec

- I/O: 3x DisplayPort 1.4, 1x HDMI 2.0b, 1x USB Type-C

- Power Input: 2x 8-Pin

- TDP: 260W (Minimum 650W PSU)
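As a quick sanity check, the memory bandwidth figure in the specification follows directly from the memory speed and interface width. The short Python sketch below shows the arithmetic; the function name is my own, and it assumes the quoted 14Gbps is the per-pin data rate.

```python
def memory_bandwidth_gb_per_s(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth in GB/sec.

    data_rate_gbps: per-pin data rate in gigabits per second (assumed)
    bus_width_bits: width of the memory interface in bits
    """
    # Total gigabits per second across the bus, divided by 8 bits per byte
    return data_rate_gbps * bus_width_bits / 8

print(memory_bandwidth_gb_per_s(14, 352))  # 616.0
```

This matches the 616GB/sec listed above for the 14Gbps, 352-bit configuration.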

The PNY card includes dual-slot cooling and is configured with two heatsinks and three fans. The cooler is 30cm in length, which is fairly large, extending 3cm past the PCB.

Common across all GeForce RTX cards, the PNY card includes an aluminium backplate. The card itself has a very subtle design, with no flashy extras such as RGB lighting (which I prefer).

The PNY card includes two 8-Pin power inputs, supporting the standard 260W TDP. However, expect power draw to exceed this figure when overclocking.

PNY decided to match the NVIDIA reference design for I/O, specifically 3x DisplayPort 1.4, 1x HDMI 2.0b, 1x USB Type-C. The USB Type-C can be used to simplify the use of VR Headsets.

Overall, the PNY card is very well built but lacks any unique selling point. PNY have chosen not to stray far from the NVIDIA reference design, even matching the modest overclock of the Founders Edition.

The two-heatsink, three-fan cooling design is definitely a benefit, which should result in greater overclocking potential. However, I suspect the results will be in line with other third-party cards that have chosen the same cooling configuration.

The RRP of the PNY card is £1299.99. At this price, I am not sure PNY have done enough to make their GeForce RTX 2080 Ti stand out from the crowd. However, I have seen it on sale for £1199.99, which is more competitive when compared against other equivalent third-party cards.

Performance

I do not have a comprehensive test environment for graphics cards, so I would recommend referring to reviews from PC Perspective and AnandTech for a detailed analysis of the GeForce RTX 20 series.

With that said, I can confirm the GeForce RTX 2080 Ti is a beast! At 1440p and even 4K, it breezes through my modern game collection, generally above 100FPS at maximum image quality.

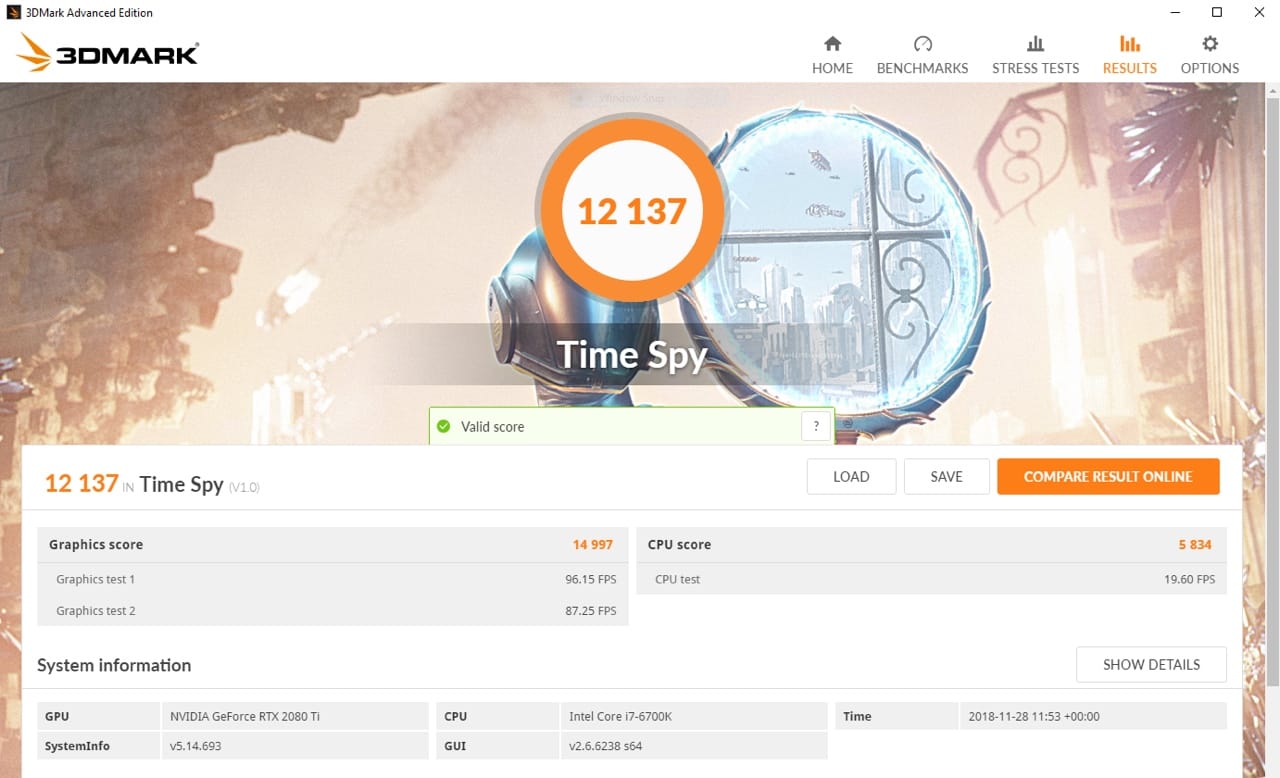

When added to my custom-built PC, the GeForce RTX 2080 Ti achieved a 3DMark score of 12137 (image below).

This is a healthy 71% performance improvement over the Gigabyte GeForce GTX 1080 it replaced. For reference, the GeForce GTX 1080 scored 7088.

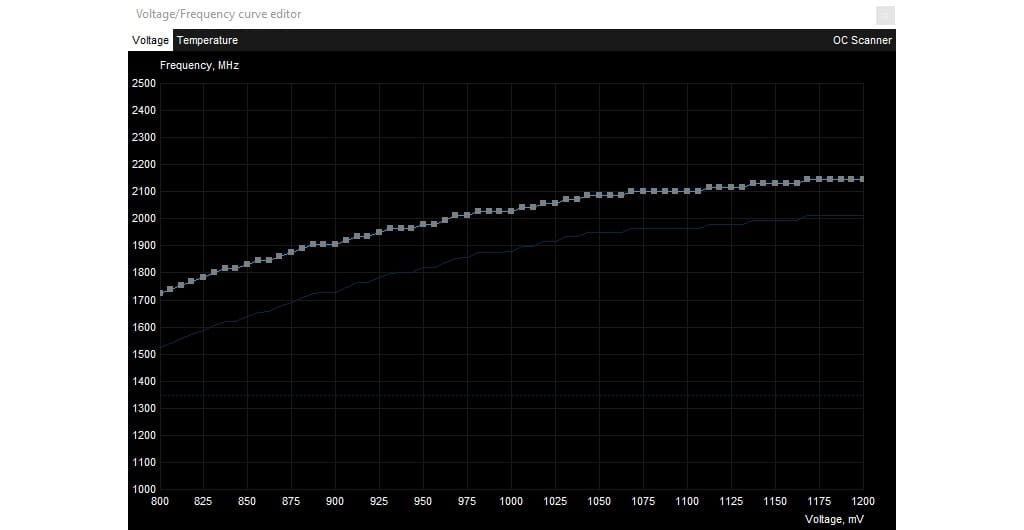
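For anyone wanting to check the percentage, the improvement is simply the difference between the two 3DMark scores, divided by the older score. A minimal Python sketch follows (the helper name is my own, not part of any benchmarking tool):

```python
def percentage_uplift(old_score, new_score):
    """Relative improvement of new_score over old_score, as a percentage."""
    return (new_score - old_score) / old_score * 100

# 3DMark scores from my system: GTX 1080 (old) vs. RTX 2080 Ti (new)
uplift = percentage_uplift(7088, 12137)
print(f"{uplift:.1f}%")  # 71.2%
```

The same helper can be applied to any pair of scores from the same benchmark, provided the rest of the system is unchanged.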

Alongside the GeForce RTX 20 series release, NVIDIA also introduced the NVIDIA OC Scanner, which enables automatic overclocking and stress testing.

The NVIDIA OC Scanner takes approximately 20 minutes to complete and produces a result that NVIDIA state will be stable for daily usage. This is a welcome inclusion, allowing for “one-click” overclocking that will likely meet the needs of all but the most hardcore overclockers.

To access the NVIDIA OC Scanner, you will need a compatible overclocking utility. At the time of writing, only MSI Afterburner had been updated to include the new feature. Thankfully, overclocking utilities are not vendor-specific, so I expect a lot of options to become available over the coming months.

Outlined below are the results of the NVIDIA OC Scanner, producing a new power curve and a new maximum reported boost clock of 2070MHz (up from the factory 1635MHz).

Unfortunately, it was not possible to test ray tracing or DLSS outside of controlled or synthetic benchmarks. As a result, I cannot comment on the touted quality or performance benefits. In theory, some games (e.g. Battlefield V, Shadow of the Tomb Raider) will start gaining support for these features later in 2018 via software patches.

Conclusion

The GeForce RTX 20 series is an interesting release from NVIDIA. I commend them for continuing to push the boundaries of technology, especially considering the lack of competition from AMD. For example, it would have been very easy for NVIDIA to become complacent, essentially re-badging the GeForce GTX 10 series, knowing that their market position would remain secure.

The potential impact of hybrid rendering (ray tracing and rasterisation) is significant. However, developers will need to embrace and mature the techniques before we start to see tangible results. This is a concern, as the GeForce RTX 20 series will likely be reserved for a small percentage of the gaming population, given the high cost of entry and the relatively small market share of PC gaming when compared against console gaming (e.g. PlayStation and Xbox).

As a result, the incentive for developers to prioritise techniques such as ray tracing feels like a stretch in 2018 and maybe 2019. Instead, they will likely focus on techniques that target the mass market and remain compatible with the current generation of consoles (e.g. PlayStation 4 Pro). This was evident from the launch of the GeForce RTX 20 series, as there was no game support for ray tracing or DLSS.

In conclusion, I believe the GeForce RTX 20 series has the potential to be a “game-changer” (literally). However, the high price and lack of software support will likely frustrate most consumers, especially considering the tangible benefits are likely multiple years away from maturity.

With this in mind, the GeForce GTX 10 series starts to look like a better deal (today), especially now that the cryptocurrency bubble has burst, resulting in more competitive pricing. The question is how long the stock will last. Therefore, if you are in the market for a new graphics card, I would recommend moving quickly on a GeForce GTX 1070, 1080 or 1080 Ti.