Databricks

Is it possible to simplify big data and artificial intelligence?

This is the mission of Databricks, a company founded by the team that started Apache Spark.

What is Apache Spark?

Apache Spark is an open-source cluster-computing framework, which provides an interface for programming clusters with implicit data parallelism and fault tolerance. Spark facilitates the implementation of iterative algorithms, which can be used to train machine learning systems.

In production, Spark requires two key components: a cluster manager (e.g. Hadoop YARN, Apache Mesos) and a distributed storage system (e.g. Hadoop Distributed File System, Cassandra).

Why Databricks?

Apache Spark is incredibly powerful, but can also be extremely complicated. As a result, Databricks developed a web-based data platform, built on top of Apache Spark, that enables “anyone” to build and deploy advanced analytics services.

For example, if you needed to compute the average of fifty numbers, you could easily use Microsoft Excel to calculate the answer. This could be achieved in a few simple clicks, with the result produced almost instantly. However, if you needed to achieve the same simple outcome with a petabyte of data, the process would be far more complicated, likely requiring specialist skills and a much longer lead time.
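To make the contrast concrete, here is a minimal sketch in plain Python (not Spark code) of the general pattern a cluster framework applies to an average: each partition is reduced to a partial (sum, count), and the partials are then combined. The function names, the sample data and the four-way split are assumptions made purely for illustration.

```python
from functools import reduce


def partial_sum_count(partition):
    """Summarise one partition as (sum, count); on a cluster this would run on each worker."""
    return (sum(partition), len(partition))


def combine(a, b):
    """Merge two partial results; the operation is associative, so merge order does not matter."""
    return (a[0] + b[0], a[1] + b[1])


def distributed_mean(partitions):
    """Compute the mean from per-partition partials without gathering the raw data in one place."""
    total, count = reduce(combine, map(partial_sum_count, partitions), (0, 0))
    if count == 0:
        raise ValueError("cannot average an empty data set")
    return total / count


# Hypothetical data: the numbers 1..50 split across four "workers".
numbers = list(range(1, 51))
partitions = [numbers[i::4] for i in range(4)]
mean = distributed_mean(partitions)
```

The arithmetic is trivial; the hard part at petabyte scale is everything around it (splitting the data, scheduling the work, recovering from failed workers), which is the complexity a platform such as Databricks sets out to hide.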

In short, Databricks are trying to remove the complexities that come with the word “big”, when dealing with big data, allowing companies to quickly and easily extract meaningful insight from their data.

Databricks Unified Analytics Platform

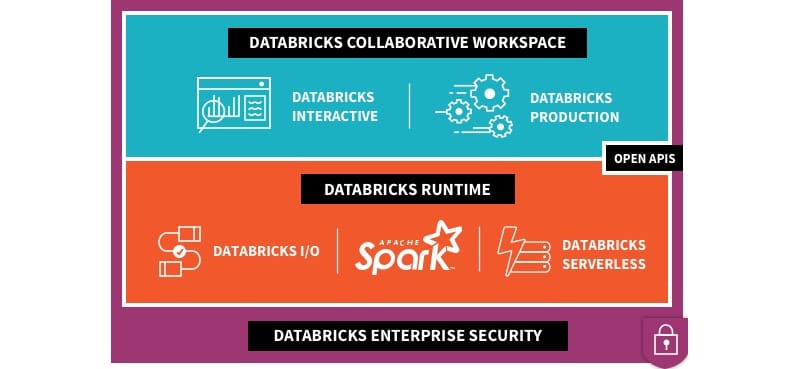

The Databricks Unified Analytics Platform aims to provide a consistent workspace for all stakeholders (data science, engineering and the business), enabling teams to quickly build data pipelines, train machine learning models and share insights, all from one environment. This includes interactive notebooks (supporting R, Python, Scala and SQL), customisable visualisations and dashboards, as well as the ability to integrate with DevOps tools such as GitHub for version control.

As part of the platform, the Databricks Runtime delivers an optimised version of Spark, leveraging technologies such as Amazon S3 as an access layer, as well as providing more efficient decoding, caching and data skipping to improve I/O performance.
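Data skipping is commonly implemented by keeping minimum and maximum values per file for a column and reading only the files whose range can satisfy a filter. The sketch below illustrates that general idea in plain Python; it is not the Databricks Runtime implementation, and the `FileStats` type, the file names and the value ranges are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file metadata: the min and max of one column in that file."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(stats, lower, upper):
    """Return the paths of files whose [min, max] range overlaps the filter [lower, upper]."""
    return [s.path for s in stats if s.max_value >= lower and s.min_value <= upper]


stats = [
    FileStats("part-0", 0, 99),
    FileStats("part-1", 100, 199),
    FileStats("part-2", 200, 299),
]

# A filter such as 120 <= value <= 150 can only match rows stored in part-1,
# so the other two files never need to be read.
selected = files_to_scan(stats, 120, 150)
```

The saving comes from consulting small metadata records instead of opening every file, which is why this kind of technique mainly improves I/O rather than compute.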

Finally, the use of “Serverless Computing”, together with the fact that the platform is delivered as a managed service, helps to drive down cost (through utility compute) and reduce the infrastructure and security overhead.

The diagram above provides a high-level overview of the Databricks Unified Analytics Platform.

Databricks can be deployed to Amazon Web Services (AWS) or Microsoft Azure.

I increasingly see the underlying compute and storage infrastructure as a commodity. I would therefore argue that deployment decisions should be made based on the data, not the infrastructure (assuming competitive pricing).

For example, in healthcare, research companies are increasingly making use of public data sets, which have the potential to be a gold mine for anyone who can extract insight. More and more of these large data sets are persisted in public cloud infrastructure (e.g. AWS, Azure). As a result, the ability to deploy ephemeral environments close to the data will enable maximum agility, flexibility and low-latency access.

What is Databricks Delta?

On 25th October 2017 at the Spark Summit, Databricks officially announced Databricks Delta, which is a product designed to simplify large-scale data management.

Databricks Delta targets companies that are struggling to manage their growing big data architecture, which usually includes multiple data warehouses, data lakes and streaming systems. A key challenge with this “hybrid” architecture is the Extract-Transform-Load (ETL) processes, which can be complex to build, error-prone and slow (impacting the integrity of downstream systems).

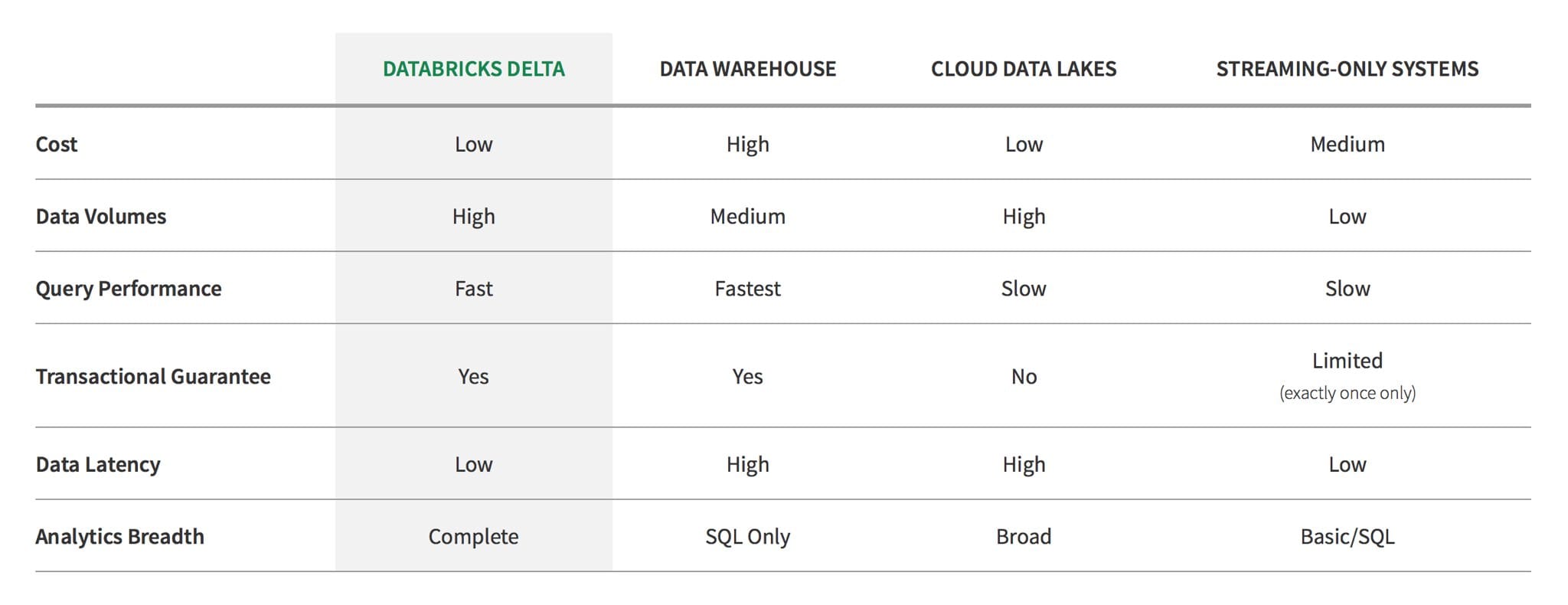

Databricks Delta complements the Databricks Unified Analytics Platform by delivering a single data management tool that combines the scale of a data lake, the reliability and performance of a data warehouse, and the low latency of streaming in a single system. The diagram above (Databricks Marketing) provides a basic comparison.

As outlined in the Databricks Delta blog post, the new architecture can be summarised in three parts:

- The reliability and performance of a data warehouse: Delta supports transactional insertions, deletions, upserts, and queries; this enables reliable concurrent access from hundreds of applications. In addition, Delta automatically indexes, compacts and caches data; this achieves up to 100x improved performance over Apache Spark running over Parquet or Apache Hive on S3.

- The speed of streaming systems: Delta transactionally incorporates new data in seconds and makes this data immediately available for high-performance queries using either streaming or batch.

- The scale and cost-efficiency of a data lake: Delta stores data in cloud blob stores like S3. From these systems it inherits low cost, massive scalability, support for concurrent accesses, and high read and write throughput.
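To illustrate what a transactional upsert means in practice, here is a toy sketch in plain Python. It is not the Databricks Delta API and says nothing about how Delta is implemented; the `apply_changes` function, the change format and the choice to merge fields into an existing row are all assumptions for illustration. The point is the all-or-nothing behaviour: a batch either applies in full or leaves the table exactly as it was.

```python
def apply_changes(table, changes):
    """Return a new table with every change applied, or raise and leave `table` untouched.

    `table` maps a key to a row dict. Each change is a tuple:
    ("upsert", key, row) updates a matching row or inserts a new one;
    ("delete", key, None) removes the row if it exists.
    """
    # Work on a copy so a failure part-way through cannot expose a half-applied batch.
    staged = {key: dict(row) for key, row in table.items()}
    for op, key, row in changes:
        if op == "upsert":
            staged[key] = {**staged.get(key, {}), **row}
        elif op == "delete":
            staged.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return staged


# Hypothetical table and batch of changes.
table = {1: {"name": "a", "qty": 1}}
new_table = apply_changes(
    table,
    [("upsert", 1, {"qty": 5}), ("upsert", 2, {"name": "b", "qty": 2})],
)
```

Readers keep seeing the old `table` until the new version is returned in one step, which is the property that allows many applications to read and write concurrently without observing partial updates.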

Conclusion

When people ask me which technologies will change the world, it is hard to ignore the potential of artificial intelligence, driven by machine learning and big data.

Unfortunately, these concepts are still limited to a handful of technology pioneers, who have the funds, resources and expertise to execute at scale and drive towards a positive return on investment.

As a result, I am certainly supportive of the Databricks vision to simplify big data, recognising that the complexities of big data are a key barrier to mass-market adoption of artificial intelligence concepts.

Thanks to the close connection with the Apache Spark project and the growing community, I believe Databricks are well positioned to help simplify big data. With the introduction of Databricks Delta, they can now support the end-to-end journey from management to insights.